Cycle 3: The Sound Station

Posted: December 11, 2023 Filed under: Arvcuken Noquisi, Final Project, Isadora | Tags: Au23, Cycle 3

Hello again. My work culminates in Cycle 3 as The Sound Station:

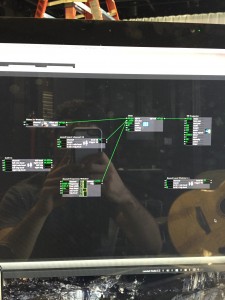

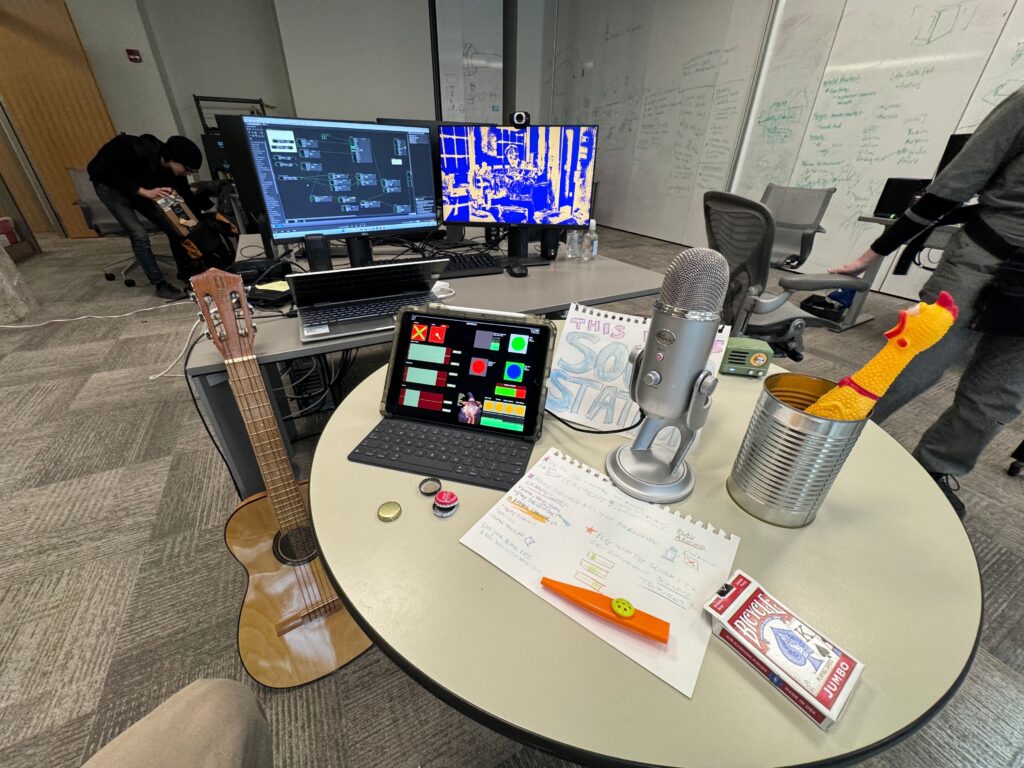

The MaxMSP granular synthesis patch runs on my laptop, while the Isadora video response runs on the ACCAD desktop – the MaxMSP patch sends OSC over to Isadora via Alex’s router (it took some finagling to get around the ACCAD desktop’s firewall, with some help from IT folks).
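For readers wondering what actually travels over the router here: OSC messages are small UDP packets, and Max's `udpsend` object builds them for you. As a rough illustration only, the Python sketch below hand-encodes a one-float OSC message using just the standard library. The address `/grain/level`, the IP, and the port are hypothetical placeholders, not the values used in The Sound Station.

```python
import socket
import struct


def _osc_string(data: bytes) -> bytes:
    """OSC strings are null-terminated, then null-padded to a 4-byte boundary."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_float(address: str, value: float) -> bytes:
    """Encode one OSC message carrying a single big-endian float32 argument."""
    return _osc_string(address.encode("ascii")) + _osc_string(b",f") + struct.pack(">f", value)


def send_osc_float(host: str, port: int, address: str, value: float) -> None:
    """Send the encoded message as one UDP datagram (no connection or handshake)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc_float(address, value), (host, port))


# Hypothetical usage: send a grain-output level to the machine running Isadora.
# send_osc_float("192.168.1.20", 1234, "/grain/level", 0.42)
```

Because the messages ride on plain UDP, a firewall blocking the receiving port usually just discards them and the sender sees no error, which is part of why this kind of cross-machine setup can take some finagling.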

I used the Mira app on my iPad to create an interface for interacting with the MaxMSP patch. This gave me the chance to make the digital aspect of my work seem more inviting and to encourage more experimentation. I faced a bit of a challenge, though, because some important MaxMSP objects do not actually appear in the Mira app on the iPad. I spent a lot of time rearranging and rewording parts of the Mira interface to avoid confusing the user. Additionally, I wrote out a little guide page to set on the table, in case people needed more information to understand the interface and what they were “allowed” to do with it.

Video 1:

The Isadora video responds to both the microphone input and the granular synthesis output. The microphone input alters the colors of the stylized webcam feed to parallel the loudness of the sound, going from red to green to blue with especially loud sounds. This helps the audience mentally connect the video feed to the sounds they are making. The granular synthesis output appears as the floating line in the middle of the screen: it elongates into a circle/oval with the loudness of the granular synthesis output, creating a dancing inversion of the webcam colors. I also threw a little slider into the iPad interface to change the color of the non-mic-responsive half of the video, to direct audience focus toward the computer screen so that they recognize the relationship between the screen and the sounds they are making.
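The loudness-to-color idea can be sketched outside Isadora, too. The Python below is not the actual patch; it assumes a block of audio samples in the range -1.0 to 1.0, an arbitrary -60 dB noise floor, and a linear sweep through HSV hue, so quiet input reads as red, medium as green, and loud as blue.

```python
import colorsys
import math


def rms(samples):
    """Root-mean-square level of a block of samples in the range -1.0..1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudness_to_rgb(samples, floor_db=-60.0):
    """Map block loudness onto a red -> green -> blue hue sweep.

    Anything at or below floor_db (an assumed noise floor) is pure red,
    0 dBFS is pure blue, and the midpoint lands on green.
    """
    level = rms(samples)
    db = 20.0 * math.log10(level) if level > 0 else floor_db
    t = min(max((db - floor_db) / -floor_db, 0.0), 1.0)  # normalized 0.0..1.0
    hue = t * (2.0 / 3.0)  # HSV hue: 0 = red, 1/3 = green, 2/3 = blue
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


print(loudness_to_rgb([0.0] * 256))  # silence -> (1.0, 0.0, 0.0), red
```

A logarithmic (dB) scale is used because perceived loudness tracks it more closely than raw amplitude does; a linear mapping would spend most of the hue range on very loud sounds.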

The video aspect of this project does personally feel a little arbitrary; I would definitely focus more on it in a potential cycle 4. I would need to make the video feed larger (on a bigger screen) and more responsive for it to have any real impact on the audience. I feel like the audience focuses so much more on the instruments, microphone, and iPad interface that the video feed hardly seems necessary, but I wanted to keep it as an aspect of my project to illustrate the capacity MaxMSP and Isadora have to work together on separate devices.

Video 2:

Overall, I wanted my project to incite playfulness and experimentation in its audience. I brought my flat guitar (“skinned” guitar), a kazoo, a can full of bottlecaps, and a deck of cards, and I miraculously found a rubber chicken in the classroom to add to the array of instruments I offered at The Sound Station. The curiosity and novelty of the objects serve the playfulness of the space.

Before our group critique, we had one visitor go around for essentially one-on-one project presentations. I took a hands-off approach with this individual, partly because I didn’t want to be watching over their shoulder and telling them how to use my project correctly. While they found some entertainment engaging with my work, I felt like they were missing essential context that would have enabled more interaction with the granular synthesis and the instruments. In stark contrast, I tried to be very active in presenting my project to the larger group. I led them to The Sound Station, showed them how to use the flat guitar, and joined in making sounds and moving the iPad controls with the whole group. This was a fascinating exploration of how group dynamics and human presence within a media system can enable greater activity. I served as an example for the audience to mirror; my actions and presence served as permission for everyone else to become more involved with the project. This definitely made me think more about what direction I would take this project in future cycles, depending on whether it is meant for group use or personal use (since I plan on using the MaxMSP patch for a solo musical performance). I wonder how I would have started this project differently if I had thought of it not as a personal tool but as something directly intended for group/cooperative play. I probably would have taken much more time to work on the user interface and removed the video feed entirely!

Pressure Project 1: “About Cycles”

Posted: September 13, 2023 Filed under: Isadora, Pressure Project I | Tags: Au23, Isadora, Pressure Project, Pressure Project One

Recording of the Pressure Project:

Motivations:

- Learn about Isadora

  - General features/ways to create in Isadora

  - Ways to organize objects in Isadora

  - Ways to store information in Isadora

  - Control flow

- Engage with the subject of “Cycles”

  - This class uses cycles as an integral component of its processes. Therefore, focusing on the idea of cycles for this first project seemed fitting.

  - Because this project encouraged the use of randomness to create something that “plays itself” (once you start it) and holds the viewer’s attention for some time, playing with indefinite cycles seemed appropriate.

- Find a “Moment of Joy”

  - The “Side Quest” for this project was to invoke laughter or a moment of joy.

  - When I started the 6 hours allotted for this project, I was in a bit of a bad mood and did not feel like creating something joyful. Therefore, I decided to challenge myself to take something negative and turn it into something positive, since this artwork would feel more authentically “joyful” to me if that “joy” came from a genuine determination to find it within an honest acknowledgement of both the good and the bad.

How the Project Supports those Motivations:

- The Storyline

  - The beginning portion employs Sisyphean imagery to convey feelings of being trapped in cycles and not making any noticeable progress.

    - I experimented with the number of times this scene would play, trying to play it just enough times that the viewer could think this scene was all there would be: a psychological trick that would hopefully invoke negative feelings corresponding to this theme.

    - Since the animation was relatively abstract (there was no human figure included, for example), I was glad to hear from the people who watched it in class that they recognized the reference.

  - Eventually, it is revealed that the rocks rolling backwards are rolling into somewhere new and exciting.

    - The rock travels to these new places over bridges created by other rocks that had arrived there before. (I am not sure from the audience response whether this part came through, consciously or subconsciously. If I were to continue working on this project, I would change what the rocks look like to make it more obvious that the bridge is made of those rocks specifically.)

    - This animation of the traveling rock cycles indefinitely, with the background effects randomized each time. (This, combined with the Sisyphean section changing location after the viewer starts to think it will be the only one, had the interesting effect of leaving the in-class audience unsure for some time whether this section would repeat indefinitely. While this has some obvious drawbacks, it arguably complements some of the themes of this piece.)

  - While I want each viewer to come to their own personal interpretation of this piece, I hope it can encourage viewers to consider that, even if we cannot see it, we still get something out of each pass through the cycles we are stuck in (even the ones where the negative effects far outweigh the positive ones), even if that is just a little more knowledge we can use to get a bit further next time.

- The Technical Aspects

  - This project gave me the opportunity to experiment with a variety of Isadora “Actors.” My favorite (which this project used for special effects and textures) was the “Explode” Actor.

  - I frequently used User Actors (which I found could be used similarly to classes in more traditional object-oriented programming languages) to keep things organized and to limit the number of copied-and-pasted Actors.

  - I experimented with Global Values (which have some similarities to global variables in more traditional programming), the Calculator Actor, the Comparator Actor, Actors that handled Triggers, and the Jump++ Actor for control flow, especially to repeat Scenes.

    - I tried to automatically set the Global Value at the start of the show, but some unidentified part of my logic was incorrect, so I had to remember to set it manually each time I played the show.

  - Much of the control flow resulting in the final effect on the Stage could have been accomplished with just the Counter Actor, Actors that handled Triggers, and the Jump++ Actor. However, I specifically wanted to learn about Global Values with this project, and there is some symbolism in the fact that the Scene itself truly does fully repeat (rather than just a set of steps within the Scene). This raises an interesting question about how the way something is done can itself be part of an artwork. But is that worthwhile when it takes more time, is less clean, and ultimately results in the same user/viewer experience?
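For anyone coming to Isadora from text-based programming, the Global Value / Calculator / Comparator / Jump++ chain described above behaves roughly like a counter-controlled loop. The Python generator below is a hypothetical analogue, not a translation of the actual patch: `scene_sequence`, the scene names, and the repeat count are made up. Because the counter is initialized inside the function, it resets every time the “show” starts, which is what the automatic Global Value reset was meant to provide.

```python
import itertools
import random


def scene_sequence(sisyphus_repeats, seed=None):
    """Yield (scene_name, background) pairs for the whole show, forever.

    Hypothetical stand-in for the Isadora logic: a counter (playing the role
    of the Global Value) counts replays of the opening scene, a comparison
    decides whether to replay it, and the final scene loops indefinitely
    with a randomized background on each pass.
    """
    rng = random.Random(seed)
    play_count = 0  # initialized at "show start", not set by hand
    while play_count < sisyphus_repeats:  # Comparator: still under the limit?
        play_count += 1                   # Calculator: count + 1
        yield ("sisyphus", None)          # Jump++ back into the same Scene
    while True:
        yield ("traveling_rock", rng.random())  # new random background each cycle


# Preview the first six scenes the audience would see.
for scene, background in itertools.islice(scene_sequence(3, seed=7), 6):
    print(scene, background)
```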

Isadora File Documentation/Download:

WORK > PLAY > WORK > PLAY >>>

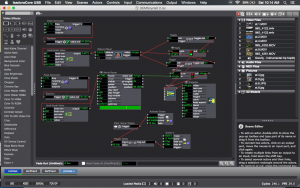

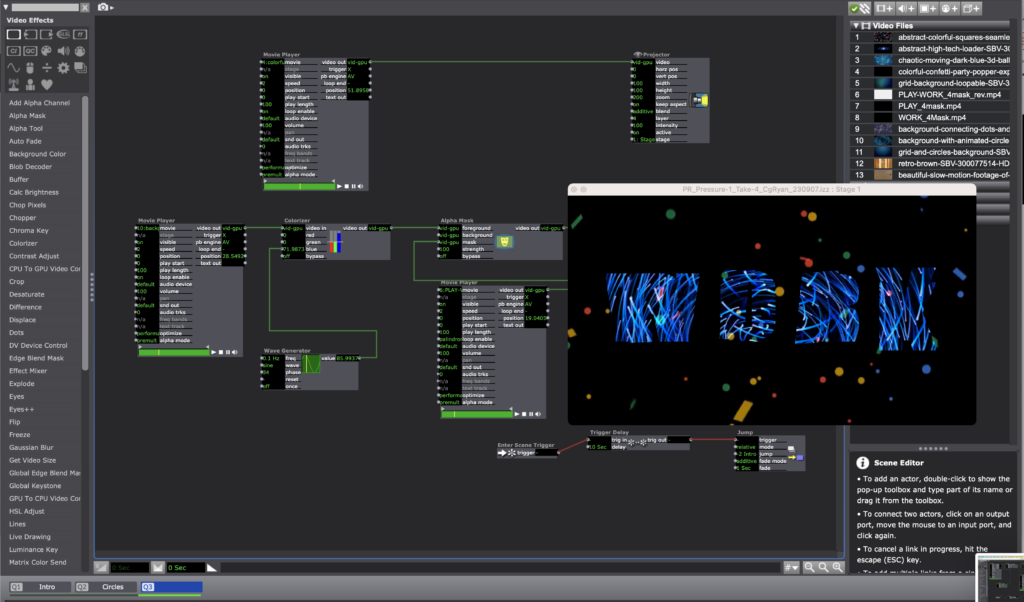

Posted: September 12, 2023 Filed under: CgRyan, Isadora, Pressure Project I, Uncategorized | Tags: Isadora, Pressure Project, Pressure Project One

My goals for Pressure Project #1 were to deepen and broaden my skills working in Isadora 3 and to create a motion piece that could hold attention. The given Resources were 6 hours and, at minimum, the use of a defined set of Actors (Shape, Projector, Jump++, Trigger Delay, and the Envelope Generator), and the piece had to auto-play.

PROCESS

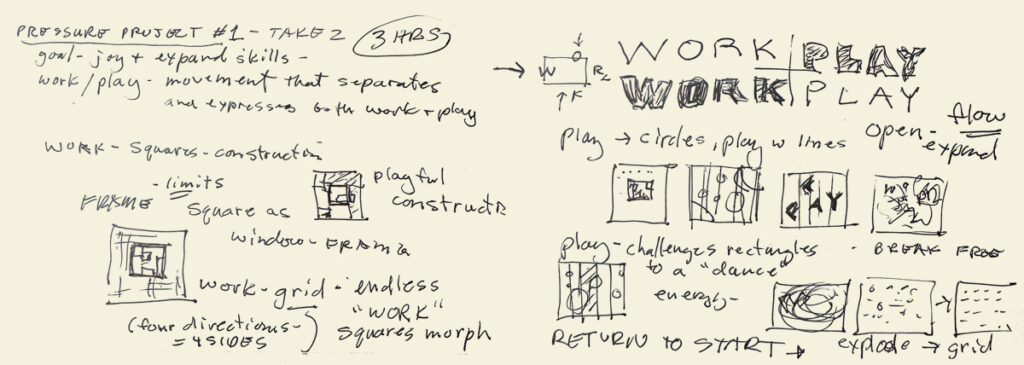

Pressure Project 1: TAKE 1 took 3 hours. Though I learned the basics of scene transitions, shape control, and video placement, I was not satisfied with how the scenes progressed and connected: I had not taken the time to create a defined concept. (When feeling “time pressured,” I sometimes forget what one of my most respected design teachers at ArtCenter said: “To save time, take the time to create a concept first.”)

Pressure Project #1 – TAKE 1

CONCEPT

I am both a designer and an artist, and creating a balance between personal work [PLAY] and paid client projects [WORK] has been an ongoing battle over my careers. I decided I wanted to symbolize this “dance” between WORK and PLAY in my motion piece. Conceptually, I think of WORK as a rectangle, or a “frame” that defines the boundaries of both the Resources and the Valuation criteria. When I think of PLAY, I think of circles: more open, expansive, and playful. When I think of the combination of these two concepts, I see a choreography between grace and collision, between satisfied expectations and serendipity. When deciding on the pacing and transitions of the whole, I wanted to create an “endless loop” between WORK and PLAY that symbolized my ubiquitous see-saw between the two poles. An endless loop would represent “no separation” between WORK and PLAY, which is my lifelong goal: to have work that feels like PLAY, and to WORK meaningfully at my PLAY so that it is worth the currency of my life force, time and energy.

Concept: WORK > PLAY sketch

ADDITIONAL TUTORIALS

I found an additional Isadora tutorial, “Build it! Video Porthole” by Ryan Webber, that demonstrated skills I wanted to learn: how to compose video layers with masking and alpha channels, plus another demo of the User Actor. This tutorial inspired me to use the Alpha Mask actor to make the WORK > PLAY > repeat cycle explicit. I decided to use the letters of the two “four-letter words” explicitly to represent my history as a communications designer, and to alternate between a more structured motion path using the squares and a more playful evolution as the piece transitioned to circles, then a finale combining the two.

LIMITED REMAINING TIME: 2.5 hours

To respect the project’s time limit, I used After Effects, an application I use for both personal art “play” and client work, to quickly create three simple animations with the WORK + PLAY letters to use with the Alpha Mask actor.

After Effects alpha masks

To save additional time, I used a combination of motion pattern videos I have used in earlier projection mapping projects. I used the Shapes and Alpha Mask actors in Isadora to combine the elements.

Scene 1 – 3 stills: videos in alpha masks

To create the endless “loop” transitions between the three scenes, I used a Trigger Delay actor in each of the three scenes to fire a Jump++ actor; in the last scene, a jump value of “-2” returns to Scene 1.

Scene 3: Trigger Delay and Jump++ to return to Scene 1: “-2”
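Since Jump++ jumps relative to the current scene, the “-2” is just arithmetic: 3 + (-2) = 1. The small Python sketch below models that loop, assuming (this is not stated above) that Scenes 1 and 2 each jump +1 and that every Trigger Delay fires exactly once per visit. The function names are hypothetical.

```python
def jump_value(scene, scene_count=3):
    """Relative jump a Scene's Jump++ would need: +1 to advance, and from
    the last Scene a negative jump back to Scene 1 (-2 when there are 3)."""
    return 1 if scene < scene_count else -(scene_count - 1)


def play_order(start=1, steps=7, scene_count=3):
    """List the Scenes visited, one Trigger Delay firing per visit."""
    order, scene = [start], start
    for _ in range(steps - 1):
        scene += jump_value(scene, scene_count)
        order.append(scene)
    return order


print(play_order())  # [1, 2, 3, 1, 2, 3, 1]
```

Writing the backward jump as `-(scene_count - 1)` keeps the loop correct if a fourth scene is added later, where the hard-coded “-2” would need to change to “-3”.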

To export the Isadora project to video to post online, I used the Capture Stage to Movie actor. I look forward to the next Pressure Project!

‘You make me feel like…’ Taylor – Cycle 3, Claire Broke it!

Posted: December 14, 2016 Filed under: Final Project, Isadora, Taylor

towards Interactive Installation,

the name of my patch: ‘You make me feel like…’

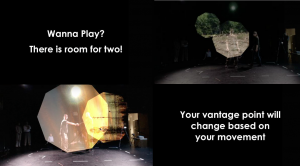

Okay, I have determined how/why Claire broke it and what I could have done to fix the problem. And I am kidding when I say she broke it. I think she and Peter had a lot of fun, which is the point!

So, all in all, I think everyone who participated with my system had a good time with another person, moved around a bit, then watched themselves, and most got even more excited by that! In these ways the performances of my patch were very successful, but I still see areas of the system that I could fine-tune to make it more robust.

My system: two nine-sided shapes track two participants through space, revealing the images/videos that are alpha-masked onto the shapes as the participants move. It gives the appearance that you are peering through some frame or scope, and you can explore your vantage point through your movement. Once participants are moving around, they are instructed to connect to see more of the images and to come closer to see a new perspective. This last cue uses a brightness trigger to switch to projectors showing the live feed and video-delayed footage, which play back the performers’ movement choices and let them watch themselves watching, or watch themselves dancing with their previous selves.

The day before we presented, with Alex’s guidance, I decided to switch to the Kinect in the grid and do my tracking from the top down for an interaction that was more 1:1. Unfortunately, this Kinect is not hung center/center of the space, but I set everything else center/center of the space and used Luminance Keying and Crop to match the space to what the Kinect saw. However, I based the switch from shapes-following-you to the live feed on brightness. When the depth was tracked on the side furthest from the Kinect, the participant’s color was darker (a video inverter was used), and the brightness window that triggered the switch was already very narrow, so participants on that side had trouble setting it off. To fix this, I think shifting the position of the Kinect or changing how the space was laid out could have helped. Also, adding a third participant to the mix (which would have made it even more fun) could have made the brightness window greater and increased the trigger range, so that the far side would no longer be a problem.
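To illustrate why a narrow brightness window fails on the far side, here is a toy Python version of an average-brightness trigger. The frame sizes, gray levels, and window bounds are all hypothetical; the point is only that a darker participant lowers the frame’s average, and that a wider window or an extra bright body in frame can bring it back into range.

```python
def mean_brightness(frame):
    """Average 0-255 gray level over a frame given as a list of pixel rows."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def triggers_switch(frame, low, high):
    """True when the frame's average brightness falls inside [low, high]."""
    return low <= mean_brightness(frame) <= high


# Hypothetical 1x10 frames: black background plus participant pixels.
near_pair = [[0] * 8 + [200, 200]]   # two people close to the Kinect: brighter
far_pair = [[0] * 8 + [120, 120]]    # same two people on the far side: darker
far_trio = [[0] * 7 + [120] * 3]     # a third person on the far side

print(triggers_switch(near_pair, 35, 45))  # True  (average 40)
print(triggers_switch(far_pair, 35, 45))   # False (average 24)
print(triggers_switch(far_pair, 20, 45))   # True  (wider window)
print(triggers_switch(far_trio, 35, 45))   # True  (average 36)
```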

I wonder if I should have left the text running for longer bouts of time, but coming in quicker succession? I kept cutting the time back thinking people would read it straight away, but it took people longer to pay attention to everything. I think this is because they are put on display a bit as performers and they are trying to read and decipher/remember instructions.

The bout that worked best, going in order all the way through as planned, was the third go-round; I’ll post a video of this one on my blog, which I can link to once I get things loaded. This tells me my system needs to accommodate more movement, because there was a wide range of movement between the performers (maybe with more space, or more clearly defined space). It also needs to account for the time people take exploring, as I mentioned above.

Cycle 2_Taylor

Posted: December 3, 2016 Filed under: Assignments, Isadora, Taylor

This patch was created to use in my rehearsal to give the dancers a look at themselves while experimenting with improvisation to create movement. During this phase of the process we were exploring habits in relation to limitations and anxiety. I asked the dancers to think about how their anxieties manifest physically, calling up the feelings of anxiety and using the patch to view themselves in real time, delayed time, and various freeze frames. From this exploration, the dancers were asked to create solos.

For cycle two, I worked out the bugs from the first cycle.

The main thing that was different for this cycle was working with the Kinect and using a depth camera to track the space and shift from the scenic footage to the live feed video instead of using live feed and tracking brightness levels.

I also connected toggles and gates to a multimix to switch between pictures and video for the first scene, using pulse generators to randomize the images being played. For rehearsal purposes, these images are content provided from the dancers’ lives, a technological representation of their memories. I am glad I was able to fix this glitch with the images and videos; before, they were not alternating. I was also able to use this in my previous patch, along with the Kinect depth camera, to switch the images based on movement instead of pulse generators.
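One way to think about the toggle/gate/multimix fix is that a single toggle state decides which player is active, so the two players can never both be on or both be off. The Python class below is a hypothetical sketch of that logic, not the Isadora patch itself; the class name and file names are made up, and each call to `pulse()` stands in for one tick of a pulse generator.

```python
import random


class AlternatingPlayers:
    """Each pulse flips one toggle that chooses the active player, and the
    newly active player jumps to a random item from its own list."""

    def __init__(self, pictures, videos, seed=None):
        self.sources = {"picture": list(pictures), "video": list(videos)}
        self.active = "video"  # flipped to "picture" on the first pulse
        self.rng = random.Random(seed)

    def pulse(self):
        """Handle one pulse; return (player_type, item) now on screen."""
        self.active = "picture" if self.active == "video" else "video"
        return self.active, self.rng.choice(self.sources[self.active])


players = AlternatingPlayers(["beach.jpg", "kitchen.jpg"], ["walk.mov", "laugh.mov"], seed=3)
for _ in range(4):
    print(players.pulse())
```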

Cycle 2 responses:

The participants thought it was cool not knowing that the system was candidly recording them. This was nice to hear because I had changed the amount of video delay so that the past self would come as more of a surprise. I felt that if participants were unaware of the delayed playback, their interaction with the images/videos at the beginning would be more natural and less conscious of being watched (even though our class is also watching the interaction).

Participants (classmates) also thought that more surprises would be interesting, like adding filters such as dots or exploding to the live video feed, but I don’t know how this would fit into my original premise for creating the patch.

Another comment I wrote down, though I am still a little unsure what they meant, dealt with the placement of performers and asked whether multiple screens might be effective. I did use the patch projecting on multiple screens in my rehearsal. It was interesting how the performers were very concerned with the stages being produced, letting that drive their movement, but were also able to stay connected with the group in the real space because they could see the stages from multiple angles. This allowed them to be present in both the virtual and the real space during their performance.

I was also excited about the movement of participants that was generated. I think I am becoming more and more interested in getting people to move in ways they would not normally and think with more development this system could help to achieve that.

link to cycle 2 and rehearsal patches: https://osu.box.com/s/5qv9tixqv3pcuma67u2w95jr115k5p0o (also has unedited rehearsal footage from showing)

Cycle 1… more like cycle crash (Taylor)

Posted: December 3, 2016 Filed under: Isadora, Taylor, Uncategorized

The struggle.

So, I was disappointed that I couldn’t get the full version (with projector projecting, camera, and a full-bodied person being ‘captured’) up and running. Even last week I did a special tech run two days before my rehearsal using two cameras (an HDMI connected camera and a web cam). I got everything up, running, and attuned to the correct brightness Wednesday and then Friday it was struggle-bus city for Chewie, then Izzy was saying my files were corrupted and it didn’t want to stay open. Hopefully, I can figure out this wireless thing for cycle 2 or maybe start working with a Kinect and a Cam?…

The patch.

This patch was formulated from/in conjunction with PP(2). It starts with a movie player and a picture player switching on and off (alternating) while randomly jumping through videos/images. Recently, though, I have realized that it is only doing one or the other, so I have been working on how the switching back and forth between the two players works (suggestions for easier ways to do this are welcome). When a certain range of brightness (or amount of motion) is detected from the Video In (fed through Difference), the image/video projector switches off and the three other projectors switch on [connected to Video In – Freeze, Video Delay – Freeze, and another Video In (when the other two are frozen)]. After a certain amount of time, the scene jumps to a duplicate scene, ‘resetting’ the patch. To me, these images represent our past and present selves but also provide the ability to take a step back or step outside of yourself to observe. In the context of my rehearsal, for which I am developing these patches, this serves as another way of researching our tendencies/habits in relation to inscriptions/incorporations on our bodies and the general nature of our performative selves.
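The “amount of motion” trigger can be approximated by frame differencing: subtract the previous frame from the current one and average the absolute differences. The Python below is a simplified sketch using tiny grayscale frames as nested lists; the threshold values are assumptions, and it is only roughly analogous to measuring brightness after a Difference actor.

```python
def motion_amount(prev_frame, frame):
    """Mean absolute per-pixel difference (0-255) between two gray frames."""
    diffs = [
        abs(a - b)
        for row_a, row_b in zip(prev_frame, frame)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


def should_switch(prev_frame, frame, low=10.0, high=255.0):
    """Switch from the image projector to the live projectors when the motion
    amount lands inside an (assumed) trigger range."""
    return low <= motion_amount(prev_frame, frame) <= high


still = [[50, 50], [50, 50]]
moved = [[50, 90], [10, 50]]  # two pixels changed by 40 each
print(should_switch(still, moved))  # True (average difference 20)
```

A still scene produces a difference near zero no matter how bright the room is, which is why differencing is a common way to detect movement rather than mere presence.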

The first cycle.

Some comments that I received from this first cycle showing were: “I was able to shake hands with my past self,” “I felt like I was painting with my body,” and people were surprised by their past selves. These are all in line with what I was going for; I even adjusted the frame rate of the Video Delay by doubling it right before presenting because I wanted this past/second self to come as more of a surprise. Another comment was that the timing of the images/videos was too quick, but as people experimented and the scene regenerated, they gained more familiarity with the images. I am still wondering about this one. I made the images quick on purpose so the dancers could only ‘grab’ what they could from each image in this flash of time (which is more about spurring a feeling than digesting the image). Also, the images used are all sourced from the performers, so they are familiar and already have certain meanings for them… I don’t quite know how spectators will relate or how to direct their meaning-making in these instances… (ideas on this are also welcome). I want to set up the systems used in the creation of the work as an installation that spectators can interact with before the performers perform, and I am still stewing on the through line between systems… although I know it’s already there.
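A video delay is essentially a fixed-length queue of frames. Assuming “doubling” means doubling the number of buffered frames (the sentence above could also be read differently), doubling the delay doubles how long it takes for the past self to appear. The Python sketch below uses `collections.deque`; the `FrameDelay` class, the 30 fps frame rate, and the frame counts are hypothetical.

```python
from collections import deque


class FrameDelay:
    """Fixed-length delay line: each push returns the frame from `delay_frames`
    pushes ago, or None while the buffer is still filling."""

    def __init__(self, delay_frames):
        self.buffer = deque(maxlen=delay_frames + 1)

    def push(self, frame):
        self.buffer.append(frame)  # oldest frame falls off automatically
        if len(self.buffer) == self.buffer.maxlen:
            return self.buffer[0]
        return None


def delay_seconds(delay_frames, fps):
    """How long the audience waits before seeing their past self."""
    return delay_frames / fps


delay = FrameDelay(2)
print([delay.push(frame) for frame in range(6)])  # [None, None, 0, 1, 2, 3]
print(delay_seconds(60, 30), delay_seconds(120, 30))  # 2.0 4.0
```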

Thanks for playing, friends!!!

Also, everyone is invited to view the Performance Practice we are working on. It is on Fridays 9-10 in Mola (this Friday is rescheduled for Mon 11.14, through Dec. 2), please come play with us… and let me know if you are planning to!

Pressure Project 1 __ Taylor

Posted: September 22, 2016 Filed under: Assignments, Isadora, Pressure Project I, Reading Responses, Taylor | Tags: #frogger:)

Under Pressure, dun dun dun dadah dun dun

Alright… so I got super frustrated fiddling around in Isadora, and my system ended up being what I learned from my failures in the first go-round. That was nice, because I was able to plan based on what I couldn’t and didn’t want to do, which led me to make simpler choices. It seemed like everyone was enthusiastically trying to grok how to interact with the system, and it felt like it kept everyone entertained and pretty engaged for more than 3 minutes. Some of the physical responses to my system were getting up on their feet, flailing about, moving left to right and using the depth of the space, clapping, whistling, and waving. Some of the aesthetic responses were that the image reminded them of a microscope or a city. I tried to use slightly random, short intervals of time between scenes and to build off of simple rules and random generators (and slight variations of the like), in an attempt to distract the brain from connecting the pattern. For a time this seemed successful, but after many cycles, once people found out the complexities were more perceived than programmed, enthusiasm waned. I really enjoyed this project and the ideas of scoring and iterations that accompanied it.

Actors I jotted down that I liked in others’ processes: {user input/output/ create inside user actor}{counter/certain#of things can trigger}{alpha channel/ alpha mask}{chroma keying/ color tracking}{motion blur / can create pathways with rate of decay ? }{gate / can turn things on/off}{trigger value}

Reading things:

media object- representation

interaction, character, performer

scene, prop, Actor, costume, and mirror

space & time | here, there, or virtual / now or then

location anchored to media (aural possibilities), instrumental relationship, autonomous agent/ responsive, merging to define identity (cyborg tech), “the medium not only reflects back, but also refracts what is given” (love this). “The interplay between dramatic function / space / time is the real power — expansive range of performative possibilities …< <107/8ish.. maybe>>

Checking In: Final Project Alpha and Comments

Posted: November 4, 2015 Filed under: Connor Wescoat, Isadora

Alpha:

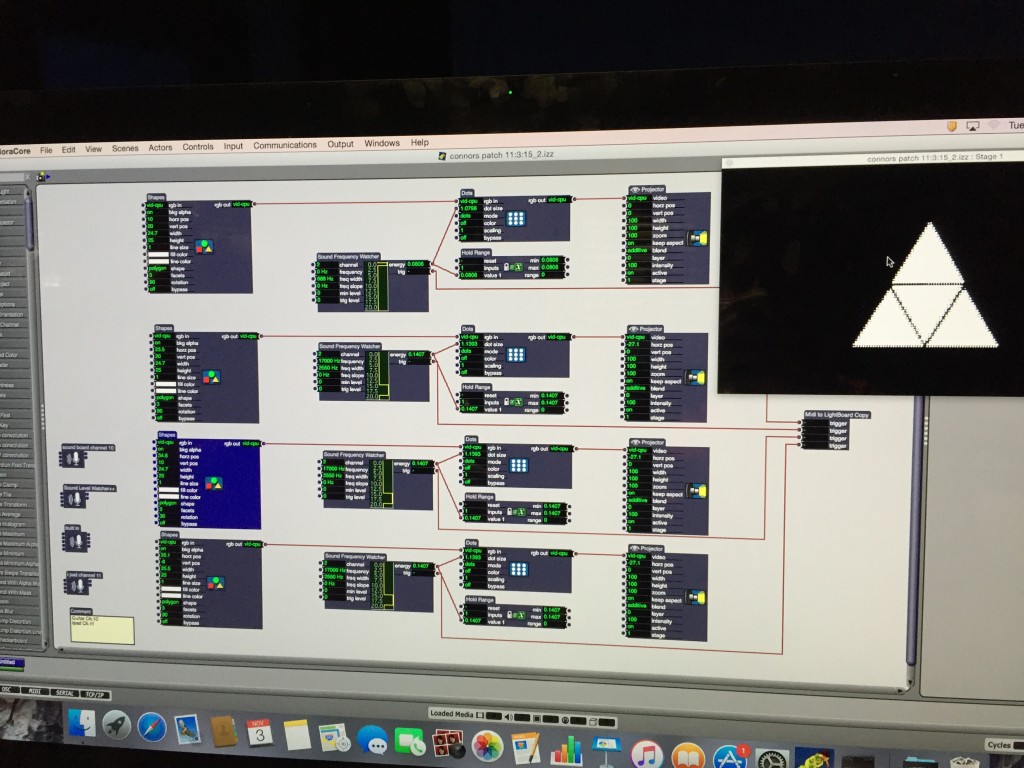

Today marked the first of our cycle presentation days for our final project. While I initially was stressing hard about this day, I was able to, with the help of some very important people, present a very organized “alpha” phase to my final project. While my idea initially took a lot of incubating and planning, I hashed it out and executed the initial connection/visual stage of my performance. Below is a sketch of my ideas for layouts, my beginning patch and my final alpha patch that I demonstrated in class today.

You will see the difference between my first patch and my second.

The small actor off to the right and below the image is a MIDI actor calibrated to the light board and my guitar. Sarah worked with me through this part and did a wonderful job explaining and visualizing this process.

Comments:

Lexi: Great start to your project! You made a really cool virtual spotlight using the Kinect. I know that the software may be giving you some initial troubles but I know you’ll be able to work through it and deliver an awesome final cut that incorporates your dance background. Keep it up!

Anna: I really like what you are doing with the Mic and Video connection. I believe that when it all comes together it will be something that’s really interactive and fun to play around with. I’m already having fun seeing how my voice influences the live capture. Keep it up!

Sarah: Words cannot describe how much you have helped me in this class and I look forward to working with you to not only complete but also OWN this project. I’m sure the final cut of your lighting project is going to be awesome and I look forward to working with you more.

Josh: How you implemented the Kinect with your personal videos is a very cool take on how someone can actually interact with video in a space. I can’t wait to see what else you add to your project!

Jonathan: Dude, your project is practically self-aware! Even if you explained it to me, I would never know how you were able to do that. I look forward to seeing your final project. Keep it up!

PP3 Isadora Patch

Posted: October 7, 2015 Filed under: Isadora, Pressure Project 3, Sarah Lawler

Hi Guys!

Here’s our class patch so far for PP3!

CLASS_PP3 CV Patch_151007_1.izz

-Sarah

Computer Vision Patch Post

Posted: October 5, 2015 Filed under: Alex Oliszewski, Connor Wescoat, Isadora, Josh Poston, Sarah Lawler

Screen Shots included within zip!!!!