Study in Movement – Final Project

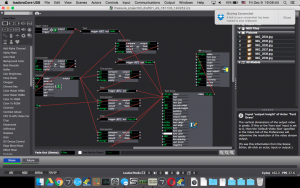

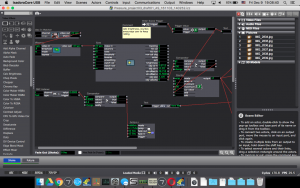

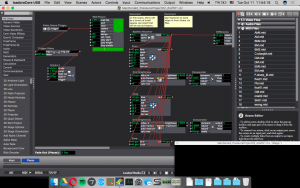

Posted: December 14, 2016 Filed under: Final Project, James MacDonald

For my final project, I wanted to create an installation that detected people moving in a space and used that information to compose music in real time. I also wanted to create a work that was not overly dependent on the resources of the Motion Lab; I wanted to be able to take my work and present it in other environments. I knew what I would need for this project: a camera of some sort, a computer, a projector, and a sound system. I had messed around with a real-time composition library by Karlheinz Essl in the past, and decided to explore it once again. After a few hours of experimenting with the modules in his library, I combined two of them (Super-rhythm and Scale Changer) for this work. I decided to use Kinect cameras (model 1414) as opposed to a higher-resolution video camera, as the Kinect is light-invariant. One Kinect did not cover enough of the room, so I used two. To capture the data of movement in the space, I used a piece of software called TSPS. For a while, I was planning on using only one computer, and had developed a method of using both Kinect cameras with the multi-camera version of TSPS (one camera was read directly by TSPS, and the other was sent into TSPS via Syphon by an application created in Max/MSP).

This is where I began running into some mild problems. Because of the audio interface I was using (MOTU mk3), the largest buffer size I was allowed to use was 1024. This became an issue because my Syphon application, created with Max, used a large amount of my CPU, even more than the Max patch, Ableton, TSPS, or Jack. In the first two cycle performances, this led to CPU-overload clicks and pops, so I had to explore other options.
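To put the buffer size in perspective, here is a small arithmetic sketch in Python. The sample rates are assumptions (the post does not say which one the session used); the point is only that a 1024-frame buffer gives the computer a little over 20 ms to produce each block of audio, and when the CPU cannot finish in that window the result is an audible click or pop.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Length of one audio buffer in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz


# Assumed sample rates; the actual session rate is not stated in the post.
for rate in (44_100, 48_000):
    ms = buffer_latency_ms(1024, rate)
    print(f"1024 frames at {rate} Hz is {ms:.1f} ms per buffer")
```

A larger buffer would give the CPU more time per block at the cost of more latency, which is why being capped at 1024 mattered here.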

I decided that I should use another computer to read the kinect images. I also realized this would be necessary as I wanted to have two different projections. I placed TSPS on the Mini Mac I wanted to use, along with a Max patch to receive OSC messages from my MacBook to create the visual I wanted to display on the top-down projector. This is where my problems began.

At first, I tried sending OSC messages between the two computers by creating a network between them over ethernet. I had done this before, and a lot of sources stated it was possible. However, this time, for reasons beyond my understanding, I was only able to send information from one machine to the other, but not in both directions. I then explored creating an ad-hoc wireless network, which also failed. Lastly, I tried connecting to the Netgear router over wi-fi in the Motion Lab, which also proved unsuccessful.
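For readers unfamiliar with OSC, the sketch below shows roughly what travels over the network: a small UDP packet containing an address string, a type-tag string, and big-endian arguments, each padded to a multiple of four bytes. It is written in Python rather than Max, and the address `/blob/x`, the host, and the port are hypothetical placeholders, not the values used in the installation.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_osc(host: str, port: int, address: str, *values: float) -> None:
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *values), (host, port))


# Hypothetical usage: send a normalized x position to another machine.
# send_osc("192.168.1.20", 12000, "/blob/x", 0.5)
```

Because UDP is connectionless, each direction is an independent stream of packets, so it is possible for one direction to work while the other does not.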

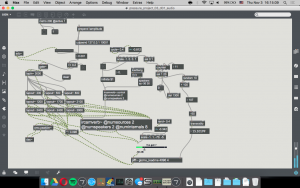

This led me to one last option: I needed to network the two computers together using MIDI. I had a MIDI-to-USB interface, and decided I would connect it to the MIDI output on the back of the audio interface. This is when I learned that the MOTU interface does not have MIDI ports. Thankfully, I was able to borrow another interface from the Motion Lab. I added some of the real-time composition modules to the Max patch on the Mini Mac, so that TSPS on the Mini Mac would generate the MIDI information that would be sent to my MacBook, where the instruments receiving the MIDI data were hosted. This proved easier said than done. I was unable to set my USB-MIDI interface as the default MIDI output in the Max patch on the Mini Mac, and then ran into an issue where something would freeze up the MIDI output from the patch. Then, half an hour prior to the performance on Friday, my main Max patch on my MacBook completely froze; it was as if I had paused all of the data processing in Max (which, while possible, is seldom done). The patch crashed, so I reloaded it, reopened the one on the Mini Mac, and adjusted some settings for MIDI CCs that I thought were causing errors. Ten minutes after that, we opened the doors and everything worked without errors for two and a half hours straight.
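As a rough illustration of what was being sent down the MIDI cable, here is a Python sketch that builds the raw bytes for the two kinds of message mentioned above: note-on messages and control changes (CCs). The specific channel, note, and controller numbers are made up for the example and are not taken from the patch.

```python
def _check(name: str, value: int, upper: int) -> None:
    """Raise if a MIDI field is outside its allowed range."""
    if not 0 <= value <= upper:
        raise ValueError(f"{name} must be in 0..{upper}, got {value}")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Three-byte note-on message: status 0x90 plus channel, then note and velocity."""
    _check("channel", channel, 15)
    _check("note", note, 127)
    _check("velocity", velocity, 127)
    return bytes([0x90 | channel, note, velocity])


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Three-byte control change message: status 0xB0 plus channel, then controller and value."""
    _check("channel", channel, 15)
    _check("controller", controller, 127)
    _check("value", value, 127)
    return bytes([0xB0 | channel, controller, value])


# Hypothetical example: middle C on channel 1, then CC 7 (channel volume) set to 100.
print(note_on(0, 60, 100).hex(" "))
print(control_change(0, 7, 100).hex(" "))
```

Compared with OSC, MIDI carries far less information per message (7-bit values, 16 channels), but it needs no network configuration at all, which is what made it a workable fallback here.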

Here is a simple flowchart of the technology utilized for the work:

MacBook Pro: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

Mini Mac: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> MacBook Pro Max Patch -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

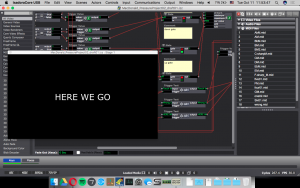

When we first opened the doors, people walked across the room and were caught off guard when they heard sound as they passed in front of the Kinects. They then stood still, out of range of the Kinects, unsure of what had just happened. I explained the nature of the work to them, and they stood there for another few minutes contemplating whether or not to run in front of the cameras, and who would do so first. After a while, they all ended up in front of the cameras, and I began explaining more of the technical aspects of the work to a faculty member.

One of the things I was asked about a lot was the staff paper on the floor where the top-down projector was displaying a visual. Some people at first thought it was a maze, or that it would cause a specific effect. I explained to a few people that the paper was there because the black floor of the Motion Lab absorbs a lot of the light from the projector, and the white paper helped make the floor visuals stand out. In a future version of this work, I think it would be interesting to connect some of the staff paper to sensors (maybe pressure sensors or capacitive touch sensors) to trigger fixed MIDI files. Several people were also curious about what the music on the floor projection represented, as the main projector showed staves with instrument names and music that was automatically transcribed as it was heard. As I’ve spent most of my academic life in music, I sometimes forget that people don’t understand concepts like partial-tracking analysis, and since the audio for this effect apparently wasn’t working, it was difficult for me to get across what was happening.

During the second half of the performance, I spoke with some other people about the work, and they were much more eager to jump in and start running around, and even experimented with freezing in place to see if the system would respond. They spent several minutes running around in the space, trying to see if they could get any of the instruments to play beyond just the piano, violin, and flute. In doing so, they occasionally heard bassoon and tuba. One person asked me why they were seeing so many impossibly low notes transcribed for the violin, which allowed me to explain the concept of key-switching in sample libraries (key-switching is when you change the playing technique of the instrument by playing notes outside the range of that instrument).
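The key-switching idea can be sketched in a few lines of Python. The violin's lowest note (the open G string, MIDI note 55 when middle C is 60) is a fact; the key-switch note numbers and articulation names below are hypothetical and vary from one sample library to another.

```python
VIOLIN_LOWEST_NOTE = 55  # G3, the open G string (middle C = MIDI note 60)

# Hypothetical key-switch map; real sample libraries each define their own.
KEY_SWITCHES = {24: "sustain", 25: "staccato", 26: "pizzicato", 27: "tremolo"}


def interpret(notes, default="sustain"):
    """Split a MIDI note stream into sounding notes tagged with the current articulation.

    Notes listed in KEY_SWITCHES produce no sound; they only change the
    articulation used for the notes that follow.
    """
    articulation = default
    sounding = []
    for note in notes:
        if note in KEY_SWITCHES:
            articulation = KEY_SWITCHES[note]
        elif note >= VIOLIN_LOWEST_NOTE:
            sounding.append((note, articulation))
    return sounding


print(interpret([60, 26, 62, 25, 64]))
```

A transcriber that simply notates every incoming MIDI note has no way of knowing that notes 24 to 27 are switches rather than pitches, so it writes them on the violin staff as impossibly low notes.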

One reaction I received from Ashley was that I should set up this system for children to play with, perhaps with a modification of the visuals (showing a picture of what instrument is playing, for example), and my fiance, who works with children, overheard this and agreed. I have never worked with children before, but I agree that this would be interesting to try and I think that children would probably enjoy this system.

For any future performances of this work, I will probably alter some aspects (such as projections and things that didn’t work) to work with the space it would be featured in. I plan on submitting this work to various music conferences as an installation, but I would also like to explore showing this work in more of a flash-mob context. I’m unsure when or where I would do it, but I think it would be interesting.

Here are some images from working on this piece. I’m not sure why WordPress decided it needed to rotate them.

And here are some videos that exceed the WordPress file size limit:

Pressure Project #3

Posted: December 9, 2016 Filed under: James MacDonald, Pressure Project 3, Uncategorized

At first, I had no idea what I was going to do for pressure project #3. I wasn’t sure how to make a reactive system based on dice. This led to me doing nothing but occasionally thinking about it from the day it was assigned until a few days before it was due. Once I sat down to work on it, I quickly realized that I was not going to be able to create a system in five hours that could recognize what people rolled with the dice, so I decided to explore other characteristics of dice instead. I made a system with two scenes using Isadora and Max/MSP. The player begins by rolling the dice and following directions on the computer screen. The webcam tracks the players’ hands moving, and after enough movement, it tells them to roll the dice. The loud sound of the dice hitting the box triggers the next scene, where various images of previous rolls appear while the numbers 1-6 appear randomly on the screen with increasing rapidity and delayed, blurred images of the user(s) fade in. Finally, the system sends us back to the first scene, where we are once again greeted with a friendly “Hello.”

The reactions to this system surprised me. I thought that I had made a fairly simple system that would be easy to figure out, but the mysterious nature of the second scene had people guessing all sorts of things about my project. At first, some people thought that I had actually captured images of the dice in the box in real time, because the first images that appeared in the second scene were very similar to how the roll had turned out. Overall, the reaction seemed very positive, and people showed a genuine interest in it. I would consider going back and expanding on this piece and exploring the narrative a little more. I think it could be interesting to develop the work into a full story.

Below are several images from a performance of this work, along with screenshots of the Max patch and Isadora patch.

Cycle #1 – Motion-Composed Music

Posted: October 11, 2016 Filed under: James MacDonald

For my project, I would like to create a piece of music that is composed in real time by the movement of users in a given space, utilizing multi-channel sound, Kinect data, and potentially some other sensors. For the first cycle, I am trying to use motion data as tracked by a Kinect using TSPS to control sounds in real time. I would like to develop a basic system that is able to trigger sounds (and potentially manipulate them) based on users’ movements in a space.

Pressure Project #2 – Keys

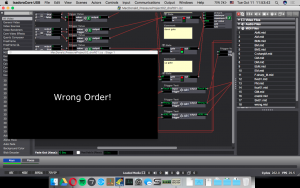

Posted: October 11, 2016 Filed under: James MacDonald, Pressure Project 2

For my second pressure project, I utilized a Makey Makey, a Kinect, and my computer’s webcam. In the first scene, the user was instructed to touch the keys that were attached to the Makey Makey while holding onto a bottle opener on a keyring that served as the earth. Each key triggered the playback of a different MIDI file. The idea was to get the user to touch the keys in a certain order, but the logic was not refined enough to require any specific order; instead, it would either transition after so many key touches or display “Wrong Order!” and trigger an obnoxious MIDI file. The contrast between the MIDI files led to laughter on at least one person’s part.

The second scene was set on a timer to transition back to the first scene. This scene featured a MIDI file that was constantly playing, and several that were triggered by the depth sensor on the Kinect, thus responding not just to one user but to everyone in the room. The webcam also added an alpha channel to shapes that were triggered when certain room triggers were initiated by the Kinect. This scene didn’t have as strong an effect as the first one, and I think part of that was due to the lack of instruction and the Kinect setup. I programmed the areas that the Kinect would use as triggers in the living room of my apartment, which has a lot of things that get in the way of the depth sensor that were not present in the room where this was performed. This scene would also have benefited from some instruction, as the first scene had fairly detailed instructions and this one did not.

macdonald_pressure_project02_files


Pressure Project #1

Posted: September 16, 2016 Filed under: James MacDonald, Pressure Project I

For my first pressure project, I wanted to create a system that was entirely dependent on those observing it. I wanted the system to move between two different visual ideas upon some form of user interaction. I used the Eyes++ actor to control the sizes, positions, and explode parameters of two different shapes, while using the loudness of the sound picked up by the microphone to control explode rates and various frequency bands to control the color of the shapes. The first scene, named “Stars,” uses two shapes, sent to four different explode actors, with one video output of the shapes actor being delayed by 60 frames. The second scene, “Friends,” uses the same two shapes, exploding in the same way, but this time adds an alpha mask on the larger shapes of the incoming video stream with either a motion blur effect or a dots filter.

Before settling on the idea of using sound frequencies to determine color, I originally wanted to determine the color based on the video input. Upon implementing this, I found that my frame rate dropped dramatically, from approximately 20-24 FPS to 7-13 FPS, thus leading me to use the sound frequency analyzer actor instead.

While I was working on this project, I spent a lot of time sitting directly in front of my computer, often in public places such as the Ohio Union and the Fine Arts Library, which resulted in me testing the system with much more subtle movements and quieter audio input. In performance, everyone engaged with the system from much further back than I had been able to test, and at a much louder volume. Because of this, the shapes moved around the screen much more rapidly, which led to the first scene ending rather quickly and the second scene lasting much longer. This is because the transition into the second scene was triggered by a certain number of peaks in volume, whereas the transition back to the first scene was triggered by a certain amount of movement across the incoming video feed.
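The two-way transition logic described above can be sketched as a small state machine. This is a Python approximation, not the Isadora patch itself; the scene names come from the project, but the threshold values and the `update` interface are assumptions made for illustration.

```python
class SceneSwitcher:
    """Leave "Stars" after enough volume peaks; leave "Friends" after enough movement."""

    def __init__(self, peak_threshold=0.8, peaks_needed=5, motion_needed=10.0):
        # All three values are hypothetical tuning parameters.
        self.peak_threshold = peak_threshold
        self.peaks_needed = peaks_needed
        self.motion_needed = motion_needed
        self.scene = "Stars"
        self._peaks = 0
        self._was_loud = False
        self._motion = 0.0

    def update(self, loudness: float, motion: float) -> str:
        """Feed one frame of loudness and motion amounts; return the active scene."""
        if self.scene == "Stars":
            is_loud = loudness >= self.peak_threshold
            if is_loud and not self._was_loud:
                self._peaks += 1  # count only the rising edge of each peak
            self._was_loud = is_loud
            if self._peaks >= self.peaks_needed:
                self.scene, self._peaks, self._was_loud = "Friends", 0, False
        else:
            self._motion += motion  # accumulate movement across frames
            if self._motion >= self.motion_needed:
                self.scene, self._motion = "Stars", 0.0
        return self.scene
```

With fixed thresholds like these, a louder room reaches the peak count sooner, so "Stars" ends quickly, which matches what happened in performance. Scaling the thresholds to the measured input level of the room would be one way to even out the scene lengths.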

One of the things I would like to improve if I had more time is the movement of shapes across the screen, fine-tuning it so that it seems a little less chaotic and a little more fluid. I would also want to spend some time optimizing the transition between the scenes, as I noticed a visible drop in frame rate.

Zip Folder with the Isadora file: macdonald_pressure_project01