Luma to Chroma Devolves into a Chromadepth Shadow-puppet Show

Posted: October 30, 2015 Filed under: Jonathan Welch | Tags: Jonathan Welch

I was having trouble getting Eyes++ to distinguish between a viewer and someone standing behind the viewer, so I converted luminance to chroma with the attached actor and used “The Edge” to create a mask that outlines each object, so that Eyes++ would see them as different blobs. Things quickly devolved into making faces at the Kinect.
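The luminance-to-chroma idea can be sketched outside Isadora. This is only an illustration, not the attached actor: it assumes 8-bit gray values and a ChromaDepth-style ramp running from red to blue, and the function name `luma_to_rgb` and the 240-degree hue span are my own assumptions.

```python
import colorsys

def luma_to_rgb(luma, hue_span_deg=240.0):
    """Map an 8-bit gray value (0-255) to a fully saturated RGB color.

    Assumption: 0 maps to red and 255 maps to blue, like a ChromaDepth ramp.
    """
    luma = max(0, min(255, luma))            # clamp to the 8-bit range
    hue = (luma / 255.0) * (hue_span_deg / 360.0)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return tuple(round(c * 255) for c in (r, g, b))

# Depth levels that are just different shades of gray come out as distinct hues.
for gray in (0, 64, 128, 192, 255):
    print(gray, luma_to_rgb(gray))
```

Two objects at different depths then differ in color rather than only in brightness, which is what gives an edge detector something to outline.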

The raw video is pretty bad. The only resolution I can get is 80 x 60. I tried adjusting the input, and the image in the OpenNI Streamer looks to be about 640 x 480, but there are only a few adjustable options and none of them deal with resolution. I think it is a problem with OpenNI streaming.

But the depth was there, and it was lighting independent, so I am working with it.

The first few seconds show the patch I am using (note the outline around the objects); the rest of the video is just playing with the pretty colors that were generated as a byproduct.

Vuo + Kinect + Izzy

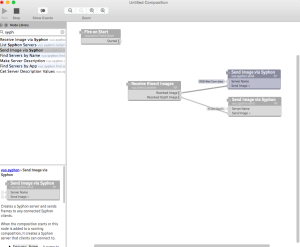

Posted: October 29, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

Screen caps and video of Kinect + Vuo + Izzy. Since we were working on the demo version of Vuo, I couldn’t use the video feed directly from Isadora to practice tracking with Eyes++ and the Blob Decoder, so a screen-capture video was taken and imported.

We isolated a particular region of space in which the Kinect/Vuo could read depth as grayscale, so that the shape in that region could be identified.
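As a rough sketch of that isolation step (not the Vuo composition itself), a depth image can be treated as a grid of gray values and everything outside a chosen band set to black. The function name and the `near`/`far` values are hypothetical.

```python
def isolate_depth_band(depth_frame, near, far):
    """Keep pixels whose gray value lies in [near, far]; set the rest to 0."""
    return [
        [value if near <= value <= far else 0 for value in row]
        for row in depth_frame
    ]

# A tiny made-up depth frame: only the values between 100 and 150 survive.
frame = [
    [10, 10, 200],
    [10, 120, 130],
    [10, 125, 240],
]
print(isolate_depth_band(frame, near=100, far=150))
```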

The patch connects the depth-tracking video to Izzy. Using Eyes++ and the Blob Decoder, I could get exact coordinates for the blob in space.

https://www.youtube.com/watch?v=E9Gs_QhZiJc&feature=youtu.be

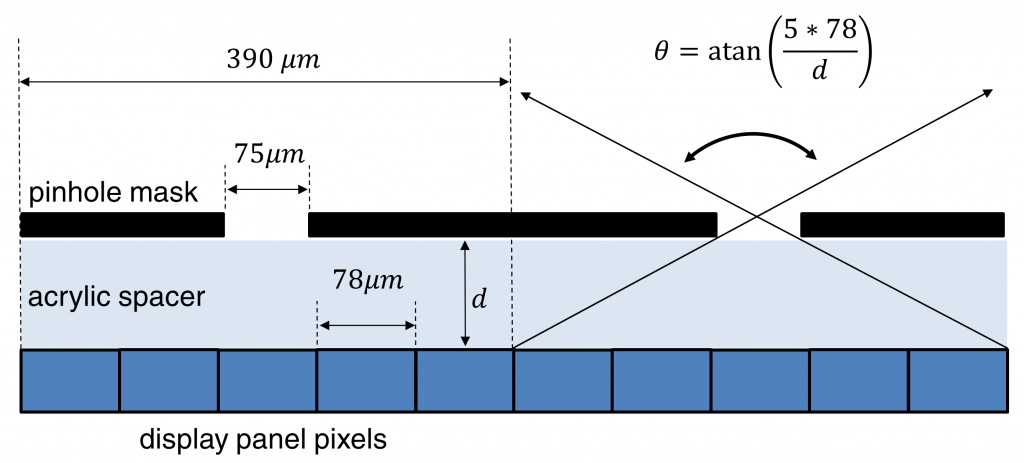

Final Project Progress

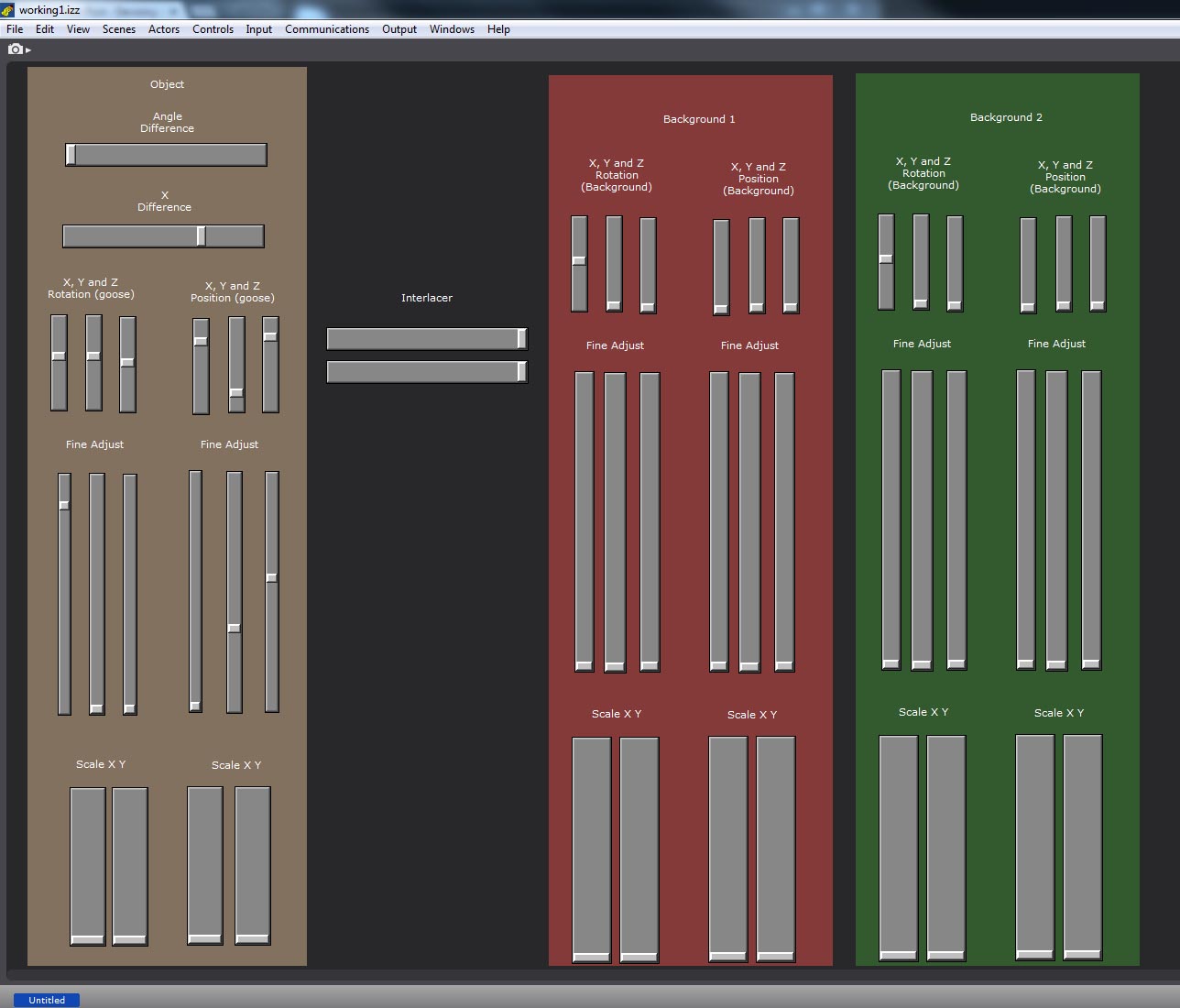

Posted: October 27, 2015 Filed under: Jonathan Welch, Uncategorized

I don’t have a computer with camera inputs yet, so I have been working on the 3D environment and interlacing. Below is a screenshot of the operator interface and a video testing the system. It is only a test, so the interlacing is not to scale and is oriented laterally. The final project will be on a screen that is mounted in portrait. I hoped to do about 4 interlaced images, but the software is showing a serious lag with 2, so it might not be possible.

Operator Interface

(used to calibrate the virtual environment with the physical)

The object controls are on the left (currently 2 views): Angle Difference (the relative rotation of object 1 vs object 2), X Difference (how far apart the virtual cameras are), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

The backdrop controls are on the right (currently 2 views; I am using mp4 files until I can get a computer with cameras): Angle Difference (the relative rotation of screen 1 vs screen 2), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

In the middle is the interlace control (width of the lines and distance between them; if I can get more than 1 perspective to work, I will change this to number of views and width of the lines).

Video of the Working Patch

0:00 – 0:01 changing the relative angle

0:01 – 0:04 changing the relative x position

0:04 – 0:09 changing the XYZ rotation

0:09 – 0:20 adjusting the width and distance between the interlaced lines

0:20 – 0:30 adjusting the scale and XYZ YPR of backdrop 1

0:30 – 0:50 adjusting the scale and XYZ YPR of backdrop 2

0:50 – 1:00 adjusting the scale and XYZ YPR of the model

I have a problem as the object gets closer and farther from the camera… One of the windows is a 3D projector, and the other is a render on a 3D screen with a mask for the interlacing. I am not sure if replacing the 3D projector with another 3D screen with a render on it would add more lag or not, but I am already approaching the processing limits of the computer, and I have not added the tuba or the other views… I could always just add XYZ scale controls to the 3D models, but there is a difference between scale and zoom, so it might look weird.

The difference between zooming in and trucking (getting closer) is evident in the “Hitchcock Zoom”
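A small sketch of why scale and zoom are not interchangeable, using the basic pinhole relation width = 2 · distance · tan(fov / 2). The function names, the units, and the 10-unit backdrop offset are all assumptions for illustration.

```python
import math

def fov_to_hold_width(subject_width, distance):
    """Horizontal field of view (degrees) at which a subject of the given
    width exactly fills the frame at the given camera distance."""
    return math.degrees(2.0 * math.atan(subject_width / (2.0 * distance)))

def visible_width(fov_deg, distance):
    """Width of the scene visible at a given distance for a field of view."""
    return 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0)

subject_width = 2.0                           # hypothetical units
for d in (1.0, 2.0, 4.0):
    fov = fov_to_hold_width(subject_width, d)
    backdrop = visible_width(fov, d + 10.0)   # backdrop 10 units behind subject
    print(f"distance {d}: fov {fov:.1f} deg, backdrop width seen {backdrop:.1f}")
```

The subject fills the frame in every case, but the amount of backdrop visible behind it changes with camera distance. Scaling the model alone would change the subject without that shift in the background.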

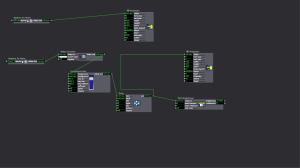

A Diagram with the Equations for Creating a Parallax Barrier

The First Tests

The resolution is very low because the only lens I could get to match a monitor was 10 lines per inch, lowering the horizontal resolution to just 80 pixels on the 8″ x 10″ lens I had. The first test uses 9 pre-rendered images. The second uses only 3, because Isadora started having trouble. I might pre-render the goose and have the responses trigger a loop (like the old Dragon’s Lair game). The drawback is that the character would not be able to look at the person interacting. But with only 3 possible views, it might not be apparent that he was tracking you.
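The 80-pixel figure follows from simple arithmetic: each lenticule shows one pixel column per view, so the per-view width is lines per inch times lens width. The sketch below assumes the 8″ side is the horizontal one; the 90 ppi monitor is a hypothetical value chosen only to show how the number of views is bounded.

```python
def view_resolution(lens_lpi, lens_width_in):
    """Horizontal pixels per view: one pixel column per lenticule."""
    return round(lens_lpi * lens_width_in)

def max_views(monitor_ppi, lens_lpi):
    """How many whole monitor pixel columns sit under each lenticule."""
    return int(monitor_ppi // lens_lpi)

print(view_resolution(10, 8))                  # 10 lines per inch across 8 inches
print(max_views(monitor_ppi=90, lens_lpi=10))  # 90 ppi is a made-up monitor
```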

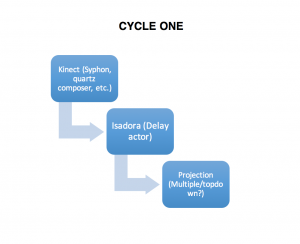

Ideation of Prototype Summary- C1 + C2

Posted: October 26, 2015 Filed under: Alexandra Stilianos, Assignments

My final project unfolds in 2 cycles that culminate in one performative and experiential environment, where a user can enter the space wearing tap dance shoes and manipulate video and lights via the sounds from the taps and their movement in space. Below is an outline of the two cycles in which I plan to actualize this project.

Cycle one: Frieder Weiss-esque video follow

Goal: Create a patch in Isadora that can follow a dancer in space with a projection. When the dancer is standing still, the animation is still as well; as the performer accelerates through the space, the animation leaves a trail that is proportional to the distance and speed traveled.
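The trail behavior in that goal could be prototyped like this before building it in Isadora. It is a sketch under assumptions: positions are (x, y) pairs from two consecutive frames, and `gain` and `max_length` are hypothetical tuning values.

```python
import math

def trail_length(prev_pos, curr_pos, dt, gain=0.5, max_length=100.0):
    """Return a trail length that grows with the dancer's speed.

    prev_pos and curr_pos are (x, y) positions from consecutive frames and
    dt is the time between them in seconds.
    """
    distance = math.hypot(curr_pos[0] - prev_pos[0], curr_pos[1] - prev_pos[1])
    speed = distance / dt
    return min(max_length, gain * speed)

print(trail_length((0, 0), (0, 0), dt=1 / 30))   # standing still: no trail
print(trail_length((0, 0), (3, 4), dt=1.0))      # moving: trail scales with speed
```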

Current state: I attempted to use Syphon with Quartz Composer to read skeleton data from the Kinect for use in Isadora, and ran into difficulties installing and understanding the software. I referenced Jamie Griffiths’s blog posts, linked below.

http://www.jamiegriffiths.com/kinect-into-isadora

http://www.jamiegriffiths.com/new-kinect-into-syphon/

Projected timeline: 3-4 more classes to understand and implement software into Isadora and then create and utilize patch.

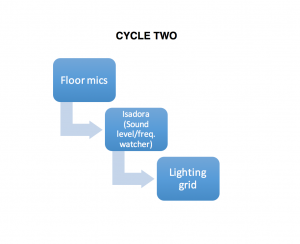

Cycle two: Audible tap interactions

Goal: Create an environment that can listen to the frequency and/or amplitude of the various steps and sounds a tap shoe can create, and have those interactions affect/control the lighting for the piece.

Current state: I have not attempted this yet, since I am still in C1, but my classmate Anna Brown Massey did some work with sound in our last class that may be helpful to me when I reach this stage.

Current questions: How do I get the software to recognize frequency in the tap shoes, since the various steps create different sounds? Is this more reliable or more difficult than using volume alone? It is definitely more interesting to me.

Projected timeline: 3+ classes. I have no experience yet with this kind of software, so a lot of experimentation is expected.
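To make the frequency-versus-volume question concrete, here is a dependency-free sketch that measures both on a short window of audio samples. It uses a naive DFT, which is slow but easy to read; the synthetic 440 Hz tone stands in for a real recording, and nothing here reflects how Isadora analyzes sound.

```python
import cmath
import math

def rms(samples):
    """Root-mean-square level: a simple measure of loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def dominant_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest bin of a naive DFT."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):               # skip DC, stop before Nyquist
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                  for i, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n

# Synthetic stand-in for a recorded step: a 440 Hz tone, 400 samples at 8 kHz.
sample_rate = 8000
tone = [0.5 * math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(400)]
print(dominant_frequency(tone, sample_rate), round(rms(tone), 3))
```

Two steps of equal loudness but different pitch would give the same RMS value and different dominant frequencies. Real tap sounds are short and noisy, so frequency is likely to need more tuning than volume alone.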

PP3 Ideation, Prototype, and other bad poetry

Posted: October 25, 2015 Filed under: Anna Brown Massey, Final Project, Pressure Project 3

GOALS:

- Interactive mediated space in the Motion Lab in which the audience creates performance.

- Interactivity is both with media and with fellow participants.

- Delight through attention, a sense of an invitation to play, platform that produces friendly play.

- Documentation through film, recording of the live feed, saving of software patches.

- Design requirements: can be scalable for an audience of unexpected number, possibly entering at different times.

CONTENT:

Current ideas as of 10/25/15. Open to progressive discovery:

- A responsive sound system affecting live projection.

- Motion tracking and responsive projection as interactive

- How do sound + live projection and motion tracking + live projection intersect?

- Brainstorm of options: musical instruments in space; how do people affect each other; how can that be aggressive or friendly or something else; what can be unexpected for the director; could I find/use the “FAO Schwarz” floor piano; do people like seeing other people or just themselves; how to take in data for sound that is not only decibel level but also pitch, timbre, rhythm; what might Susan Chess, Alan Price, or Matt Lewis have to offer regarding sound.

RESOURCES

- 6+ Videocameras projecting live feed

- 3 projectors

- CV top-down camera

- Multiple standing mics

- Software: Isadora, possibly DMX, Max MSP

VALUES i.e. experiences I would like my users (“audience” / “interactors” / “participants”) to have:

- uncertainty –> play –> discovery –> more discovery

- with constant engagement

Drawing from Forlizzi and Battarbee, this work will proceed by including attention to intersecting levels of fluent, cognitive, and expressive experience. A theater audience will be accustomed to a come-in-and-sit-down-in-the-dark-and-watch-the-thing experience, and a subversion of that plan will require attention to how to harness their fluent habits, e.g. audiences sit in the chairs that are thisclose to the work booth, but if the chairs are this far then those must be allotted for the performance, which the audience doesn’t want to disrupt. Which begs: how does an entering audience proceed into a theater space with an absence of chairs? Where are mics(/playthings!) placed, and under what light and sound “direction” that tells them where to go/what to do? A few posts ago in examining Forlizzi and Battarbee I posed this question, and it applies again here:

What methods will empower the audience to form an active relationship with the present media and with fellow theater citizens?

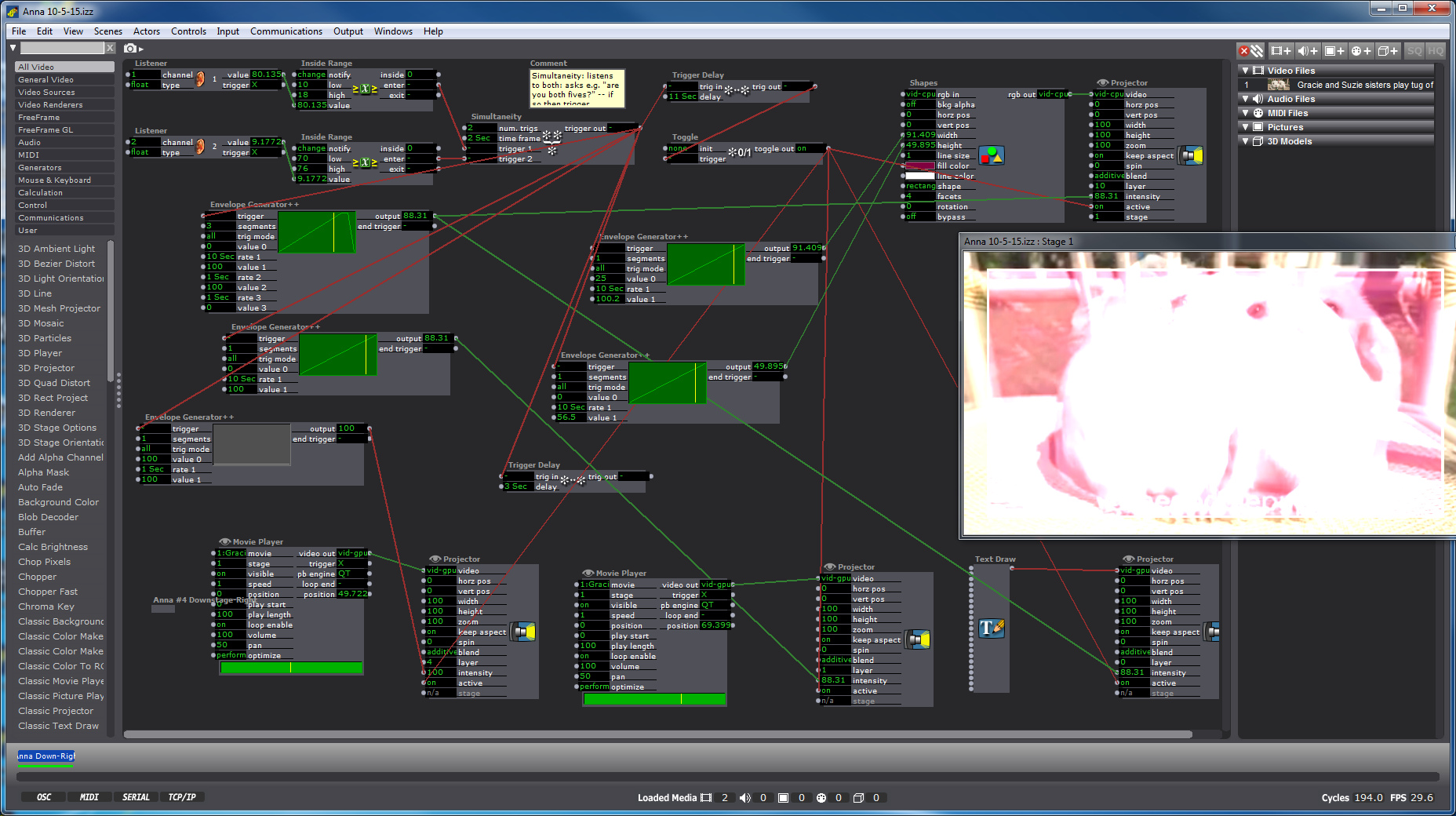

LAB: DAY 1

As I worked in the Motion Lab on Friday 10/23, I discovered an unplanned audience: my fellow classmates. They were seemingly busy with their own patches and software challenges, but once they looked over and determined that sound level was the data I had told Isadora to read and use to drive a live zoom of myself via the FaceTime camera on the Mac, I found that they were, over the course of an hour, frequently “messing” with my data in order to affect my projection. (I had set the incoming decibel level to alter the “zoom” level on my live projection.) They were loud, soft, laughing aggressively, looking for the lowest threshold at which they could still affect my zoom output.

SO, discovery that decibel level affects the live projection of a fellow user seems, through this unexpected prototype due to the presence of my co-working colleagues, to offer an opportunity to find that SOUND AFFECTING SOMEONE ELSE’S PROJECTION ENGAGES ATTENTION OF USERS ALSO ENGAGED IN OTHER TASKS. okay, good. Moving forward …
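The decibel-to-zoom link described above amounts to scaling one range onto another. A minimal sketch, assuming a level in decibels between -60 and 0 and a zoom between 100 and 400 (all four limits are hypothetical, not the values in my patch):

```python
def level_to_zoom(level_db, db_min=-60.0, db_max=0.0,
                  zoom_min=100.0, zoom_max=400.0):
    """Linearly map a sound level onto a zoom amount, clamped at both ends."""
    level_db = max(db_min, min(db_max, level_db))
    t = (level_db - db_min) / (db_max - db_min)
    return zoom_min + t * (zoom_max - zoom_min)

for level in (-80, -60, -30, 0):
    print(level, level_to_zoom(level))
```

Raising `db_min` would set the lowest threshold at which a quiet sound still moves the zoom, which is the edge my classmates kept testing.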

Guinea Pigs

Posted: October 14, 2015 Filed under: Anna Brown Massey, Pressure Project 2

Building an Isadora patch for this past project expanded my understanding of methods of enlisting CV (computer vision) to sense a light source (object) and create a projection responsive to the coordinates of that light source.

We (our sextet) selected the top-down camera in the Motion Lab as the visual data our “Video In Watcher” would accept. As I considered our light source, a robotic ball called the Sphero whose movement can be manipulated via a phone application, I was struck by our shift from enlisting a dancer to move through our designed grid to employing an object. This illuminated white ball served us not only because we were no longer dependent on a colleague to be present just to walk around for us, but also because its projected light and discreet size rendered our intake of data an easier project. We enlisted the “Difference” actor as a method of discerning light differences in space, which is a nifty way of distinguishing between “blobs.” Through this means, we could tell Isadora to recognize changes in light, aka changes in the location of the Sphero, which gave us data about where the Sphero was so that our patches could respond to it.
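The general idea behind differencing can be sketched in a few lines. This is not how the “Difference” actor is implemented (I do not know its internals); it assumes frames are small grids of brightness values and compares the current frame against an empty floor, with a hypothetical threshold of 30.

```python
def difference(prev_frame, curr_frame, threshold=30):
    """Binary mask of pixels whose brightness changed by more than threshold."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]

def centroid(mask):
    """Average (x, y) of the changed pixels, or None if nothing changed."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    return (sum(x for x, _ in points) / len(points),
            sum(y for _, y in points) / len(points))

empty_floor = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
with_light = [[0, 0, 0], [0, 0, 255], [0, 0, 0]]   # bright ball at x=2, y=1
print(centroid(difference(empty_floor, with_light)))
```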

My colleague Alexandra Stilianos wrote a succinct explanation for this method, explaining “Only when both the X and Y positions of the Sphero light source were toggled (switched) on, would the scene trigger, and my video would play. In other words, if you were in my row OR column, my video would NOT play. Only in my box (both row AND column) would the camera sense the light source and turn on, and when leaving the box and abandoning that criteria it would turn off. Each person in the class was responsible for a design/scene to activate in their respective space.”

The goal was to use CV (computer vision) to sense a light source (used by identifying the Sphero’s X/Y position in the space) to trigger different interactive scenes in the performance space.
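The row AND column logic can be written out directly. A sketch, assuming positions are reported on a 0 to 100 scale and that one person’s box spans 40 to 60 on both axes (both assumptions; the real ranges came from our floor measurements):

```python
def inside_range(value, low, high):
    """True when value lies between low and high, inclusive."""
    return low <= value <= high

def scene_active(x, y, x_range, y_range):
    """True only when the light is in both the column (x) and the row (y)."""
    return inside_range(x, *x_range) and inside_range(y, *y_range)

my_box = {"x_range": (40, 60), "y_range": (40, 60)}   # hypothetical box
print(scene_active(50, 50, **my_box))   # in the box
print(scene_active(50, 90, **my_box))   # right column, wrong row
print(scene_active(10, 50, **my_box))   # right row, wrong column
```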

Considering this newly inanimate object as a source, I discovered the “Text Draw” actor, and chose “You are alive” as the text to appear projected when the object moved into the x-y grid space indicated through our initial measurements. (Yes, I found this funny.) My “Listener” actor intercepted channels “1” and “2,” on which our set-up in the CV frame “Broadcast” the incoming light data, and to which I applied the “Inside Range” actor as a way of beginning to inform Isadora which data would trigger my words to appear. I did a quick YouTube search for “slow motion,” and found a creepy guinea pig video that, because of its single shot and stationary subjects, appeared to be possibly smooth fodder for looping. I layered two of these videos on a slight delay to give them a ghostly appearance, then added an overlay of red via the “Shapes” actor.

As we imported our video/sound/image files to accompany our Isadora patches into the main frame, we discovered that our patches were being triggered, but were failing to end once the object had departed our specific x-y coordinates as demarcated for ourselves on the floor, but more importantly as indicated by our “Inside Range” actors. We realized that our initial measurements needed to be refined more precisely, and with that shift, my own actor was working successfully, but we were still faced with difficulty in changing the coordinates on my colleague’s actor as he had multiple user actors embedded in user actors that continued to run parts of his patch independently. The possibility of enlisting a “Shapes” actor measured to create a projection of all black was considered, but the all-consuming limitation on time kept us from proceeding further. My own patch was limited by the absence of a “Comparator” as a means of refining the coordinates so that they might toggle on and off.
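The on/off behavior we were missing can be described as a small state machine that reports when the object enters the box and when it leaves. This is a sketch of the logic only, not of the “Comparator” actor; the class name and the 40 to 60 ranges are hypothetical.

```python
class RegionTrigger:
    """Emit 'on' when the light enters the box and 'off' when it leaves."""

    def __init__(self, x_range, y_range):
        self.x_range = x_range
        self.y_range = y_range
        self.active = False

    def update(self, x, y):
        inside = (self.x_range[0] <= x <= self.x_range[1]
                  and self.y_range[0] <= y <= self.y_range[1])
        if inside and not self.active:
            self.active = True
            return "on"
        if not inside and self.active:
            self.active = False
            return "off"
        return None          # no change since the last position

trigger = RegionTrigger(x_range=(40, 60), y_range=(40, 60))
for position in [(10, 10), (50, 50), (55, 45), (80, 50)]:
    print(position, trigger.update(*position))
```

Because the scene only changes on the transitions, a patch driven this way stops as soon as the object leaves the measured coordinates.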

Lexi PP3 Summary/reflection

Posted: October 12, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

I chose to write a blog post on this project for my main WordPress page for another dance-related class –> https://astilianos.wordpress.com/2015/10/13/isadora-patches-computer-vision-oh-my/

Follow the link in WordPress to a different WordPress post for WP inception.

Myo to OSC

Posted: October 12, 2015 Filed under: Uncategorized

Hey there everyone.

Here is a video of those missing steps for getting the Myo up and working:

And here are the links that you would need:

https://github.com/samyk/myo-osc for the Xcode project

https://www.myo.com/start/ for the Myo software

Remember that this is a very similar process to getting the Kinect, or any number of other devices, connected to your computer and Isadora.

Please let me know if you have any questions, or if you would like to borrow the Myo and try to do this yourself.

Best!

-Alex

Jonathan PP3 Patch and Video

Posted: October 12, 2015 Filed under: Pressure Project 3, Uncategorized | Tags: j, Jonathan Welch

https://youtu.be/HjSSyEbz68Y

CLASS_PP3 CV Patch_151007_1.izz

PP3 Isadora Patch

Posted: October 7, 2015 Filed under: Isadora, Pressure Project 3, Sarah Lawler

Hi Guys!

Here’s our class patch so far for PP3!

CLASS_PP3 CV Patch_151007_1.izz

-Sarah