Cycle #1 – Motion-Composed Music

Posted: October 11, 2016 Filed under: James MacDonald

For my project, I would like to create a piece of music that is composed in real time by the movement of users in a given space, using multi-channel sound, Kinect data, and potentially some other sensors. For the first cycle, I am trying to use motion data from a Kinect, tracked with TSPS, to control sounds in real time. I would like to develop a basic system that can trigger sounds (and potentially manipulate them) based on users’ movements in a space.
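
A minimal sketch of the kind of basic trigger system described above, written in Python purely for illustration. It assumes the tracker (for example TSPS, which can send person data over OSC) delivers a person id and a centroid in normalized 0 to 1 coordinates; receiving those messages is left out, and the `ZoneTrigger` class and the sound file names are hypothetical. The space is divided into a grid, and a sound fires only when someone enters a new cell, so a person standing still does not retrigger it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ZoneTrigger:
    """Hypothetical helper: fires a sound cue when a tracked person enters a new zone."""

    cols: int = 2
    rows: int = 2
    # Maps each tracked person's id to the zone they were last seen in.
    last_zone: Dict[int, int] = field(default_factory=dict)

    def zone_for(self, x: float, y: float) -> int:
        """Map normalized (x, y) in [0, 1] to a zone index, numbered row by row."""
        # Clamp so values at or beyond the edges land in the outermost zones.
        col = min(int(max(x, 0.0) * self.cols), self.cols - 1)
        row = min(int(max(y, 0.0) * self.rows), self.rows - 1)
        return row * self.cols + col

    def update(self, person_id: int, x: float, y: float) -> Optional[int]:
        """Return the zone index if this person just entered it, else None."""
        zone = self.zone_for(x, y)
        if self.last_zone.get(person_id) == zone:
            return None
        self.last_zone[person_id] = zone
        return zone

    def remove(self, person_id: int) -> None:
        """Forget a person once the tracker reports that they left the space."""
        self.last_zone.pop(person_id, None)


if __name__ == "__main__":
    sounds = ["drone.wav", "bell.wav", "pulse.wav", "chord.wav"]  # hypothetical files
    zones = ZoneTrigger()
    for pid, x, y in [(1, 0.1, 0.1), (1, 0.2, 0.2), (1, 0.9, 0.1)]:
        zone = zones.update(pid, x, y)
        if zone is not None:
            print(f"person {pid} entered zone {zone}: play {sounds[zone]}")
```

In the demo, the second position is still inside zone 0, so only two cues are printed. With multi-channel sound, each zone could just as well map to a speaker instead of a file.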

Pressure Project #2 – Keys

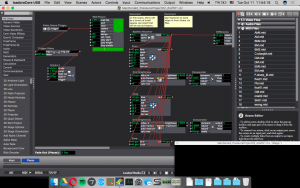

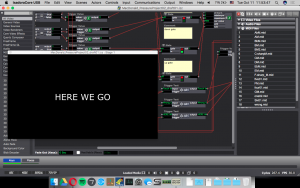

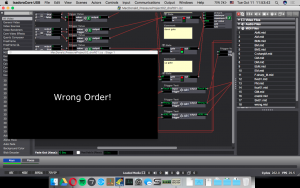

Posted: October 11, 2016 Filed under: James MacDonald, Pressure Project 2

For my second pressure project, I used a Makey Makey, a Kinect, and my computer’s webcam. In the first scene, the user was instructed to touch the keys that were attached to the Makey Makey while holding onto a bottle opener on a keyring that served as the earth (ground) connection. Each key triggered the playback of a different MIDI file. The idea was to get the user to touch the keys in a certain order, but the logic was not refined enough to require any specific order; instead, it would either transition after a certain number of key touches or display “Wrong Order!” and trigger an obnoxious MIDI file. The contrast between the MIDI files led to laughter from at least one person.
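
Since the logic never actually enforced an order, here is one way it could, sketched in Python with hypothetical key names: track how far into the expected sequence the user has gotten and reset on a wrong key. This is not the original patch; scene changes and MIDI playback are represented only by the returned labels.

```python
from typing import List, Sequence


class KeyOrderChecker:
    """Hypothetical sketch: require Makey Makey keys to be touched in a set order."""

    def __init__(self, expected: Sequence[str]):
        if not expected:
            raise ValueError("expected sequence must not be empty")
        self.expected: List[str] = list(expected)
        self.progress = 0  # how many keys of the sequence have been matched so far

    def press(self, key: str) -> str:
        """Return 'advance', 'complete', or 'wrong' for one key touch."""
        if key == self.expected[self.progress]:
            self.progress += 1
            if self.progress == len(self.expected):
                self.progress = 0
                return "complete"  # e.g. transition to the next scene
            return "advance"       # e.g. play this key's MIDI file
        # Wrong key: start over, but count it as step one if it is the first key.
        self.progress = 1 if key == self.expected[0] else 0
        return "wrong"             # e.g. show "Wrong Order!" and play the obnoxious MIDI
```

For example, with `KeyOrderChecker(["up", "down", "left"])`, touching up, down, left returns `"complete"` on the third touch, while any out-of-order touch returns `"wrong"`.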

The second scene was set on a timer to transition back to the first scene. This scene featured a MIDI file that played constantly, plus several that were triggered by the Kinect’s depth sensor, so it responded not just to one user but to everyone in the room. The webcam image was also used as an alpha channel for shapes that appeared when people activated certain trigger areas tracked by the Kinect. This scene didn’t have as strong an effect as the first one, and I think that was partly due to the lack of instructions and the Kinect setup. I programmed the trigger areas in the living room of my apartment, which has a lot of things that get in the way of the depth sensor that were not present in the room where this was performed. This scene would also have benefited from some instructions: the first scene had fairly detailed instructions, and this one did not.
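
Because the trigger areas were set up in a cluttered living room, one possible fix is to capture a background depth frame in the performance room itself, with the room empty, and only count pixels that are noticeably closer than that background. The sketch below assumes depth frames arrive as 2D lists of millimetre values with 0 meaning no reading; that format, `zone_active`, and all thresholds are hypothetical.

```python
from typing import List, Tuple

DepthFrame = List[List[int]]


def zone_active(
    depth: DepthFrame,
    background: DepthFrame,
    region: Tuple[int, int, int, int],
    near_mm: int,
    far_mm: int,
    min_pixels: int,
    tolerance_mm: int = 100,
) -> bool:
    """Hypothetical Kinect trigger area with background rejection.

    region is (row0, row1, col0, col1), half-open pixel bounds of the area.
    A pixel counts only if its depth is inside [near_mm, far_mm] AND it is at
    least tolerance_mm closer than the empty-room background, so furniture
    that is always there does not keep the trigger switched on.
    """
    row0, row1, col0, col1 = region
    hits = 0
    for r in range(row0, row1):
        for c in range(col0, col1):
            d = depth[r][c]
            if d == 0:
                continue  # no depth reading for this pixel
            bg = background[r][c]
            in_band = near_mm <= d <= far_mm
            # With no background reading, fall back to the depth band alone.
            closer_than_background = bg == 0 or d < bg - tolerance_mm
            if in_band and closer_than_background:
                hits += 1
    return hits >= min_pixels
```

Requiring `min_pixels` hits, rather than a single pixel, keeps sensor noise from firing the trigger.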

macdonald_pressure_project02_files

The Synesthetic Speakeasy – Cycle 1 Proposal

Posted: October 9, 2016 Filed under: Uncategorized

For our first cycle, I’d like to explore narrative composition and storytelling techniques in VR. I’m interested in creating a vintage speakeasy/jazz lounge environment in which the user passively experiences the mindsets of the patrons by interacting with objects that are significant to the person they’re associated with! This first cycle will likely focus on experimenting with interaction mechanics and beginning to form a narrative.

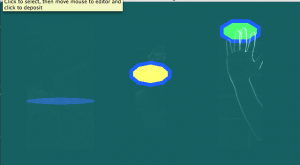

Peter’s Produce Pressure Project (2)

Posted: October 9, 2016 Filed under: Uncategorized

For our second pressure project, I used an Arduino (similar to a Makey Makey) to let users interact with a banana and an orange as vehicles for generating audio. When the user touches a piece of fruit, a specific tone begins to pulse. As that fruit moves, the tone’s frequency increases with its velocity. Then, if both pieces of fruit move quickly enough, geometric shapes on-screen explode! Once you let go of the fruit, the shapes re-form into their original geometric arrangement.
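
The post doesn’t say how the fruits’ movement was measured, so this sketch simply assumes a stream of (x, y) positions per fruit sampled at a known interval. It shows one way to map speed to pitch and to require both fruits to pass a speed threshold before the shapes explode; the base tones, scaling factor, and threshold are all hypothetical values.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

# Hypothetical tuning values; the post does not give any numbers.
BASE_FREQ_HZ = {"banana": 220.0, "orange": 330.0}
HZ_PER_UNIT_SPEED = 200.0
EXPLODE_SPEED = 1.5


def speed(prev: Point, curr: Point, dt: float) -> float:
    """Speed between two positions sampled dt seconds apart."""
    return math.hypot(curr[0] - prev[0], curr[1] - prev[1]) / dt


def tone_frequency(fruit: str, v: float) -> float:
    """Pitch of a fruit's pulsing tone: its base tone plus a term proportional to speed."""
    return BASE_FREQ_HZ[fruit] + HZ_PER_UNIT_SPEED * v


def should_explode(speeds: Dict[str, float]) -> bool:
    """True only when both fruits are reported and each is faster than EXPLODE_SPEED."""
    return set(speeds) == set(BASE_FREQ_HZ) and all(
        v > EXPLODE_SPEED for v in speeds.values()
    )
```

A linear mapping is the simplest choice; an exponential one (multiplying the base tone rather than adding to it) would make equal changes in speed sound like equal musical intervals.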

Our class’s reaction to the explosion was awesome; I wasn’t expecting it to be that great of a payoff 🙂

cycle 1 plans

Posted: October 4, 2016 Filed under: Uncategorized

I’m planning to use computer vision to track the movement of one (maybe two) people moving in different quadrants (or some other word that doesn’t imply four) of the camera’s view (using eyes, or maybe a Kinect to capture depth of movement as well), so that different combinations of movement trigger different sounds. For example, lots of movement in the upper half with stillness below could trigger buzzing, the reverse could trigger a stampede, and alternating up-and-down distal movement could trigger the sound of wings flapping. More to come if I can figure that out.
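
A rough sketch of the idea, assuming grayscale frames as 2D lists of 0 to 255 values: frame differencing measures how much of each half of the image changed, a simple rule maps the first two examples to sounds, and a short history of which half was busier stands in for the alternating wings-flapping pattern. The function names, sound labels, thresholds, and window sizes are hypothetical placeholders to tune, not tested values.

```python
from collections import deque
from typing import List, Optional, Tuple

Frame = List[List[int]]


def motion_by_half(prev: Frame, curr: Frame, threshold: int = 30) -> Tuple[float, float]:
    """Fraction of pixels that changed in the top and bottom halves of the frame.

    A pixel counts as moving if its brightness changed by more than `threshold`
    between the previous frame and the current one (simple frame differencing).
    """
    half = len(curr) // 2

    def changed_fraction(r0: int, r1: int) -> float:
        total = moved = 0
        for r in range(r0, r1):
            for a, b in zip(prev[r], curr[r]):
                total += 1
                if abs(a - b) > threshold:
                    moved += 1
        return moved / total if total else 0.0

    return changed_fraction(0, half), changed_fraction(half, len(curr))


def pick_sound(top: float, bottom: float, busy: float = 0.2, still: float = 0.02) -> Optional[str]:
    """Map busy-top/still-bottom and its reverse to hypothetical sound labels."""
    if top > busy and bottom < still:
        return "buzzing"
    if bottom > busy and top < still:
        return "stampede"
    return None


class AlternationDetector:
    """Flags a wings-flapping pattern when the busier half keeps switching."""

    def __init__(self, window: int = 10, min_switches: int = 4):
        self.history: deque = deque(maxlen=window)
        self.min_switches = min_switches

    def update(self, top: float, bottom: float) -> bool:
        if top != bottom:  # skip frames where neither half dominates
            self.history.append("top" if top > bottom else "bottom")
        h = list(self.history)
        switches = sum(1 for a, b in zip(h, h[1:]) if a != b)
        return switches >= self.min_switches
```

Splitting the frame into four quadrants instead of two halves only changes the row and column ranges passed to the differencing step.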

Pressure Project 2

Posted: October 4, 2016 Filed under: Uncategorized

https://osu.box.com/s/7evtez7zjct0hg2ws4o9g2zlj04mb0qg

I started with a series of Makey Makey water buttons as triggers for sounds, wanting to come up with different triggering sequences that would jump to different scenes. So if you touched the blue bowl of water, then the green, then the yellow twice, and then the green again, the calculator and inside range actors would move you to another scene. I made up the scenes as variations on the colors and shapes that were part of the first scene, save for one, which was just three videos of the person interacting, fragmented and layered with no sound. The latter was meant as an interruption of sorts.

BUT… in practice, people triggered the water buttons so quickly that the scenes didn’t have time to develop, and the motion detection and interpretation in some of the scenes (affecting shape location and sound volume) never became part of the experience. I’m now considering how to frame those elements so they can be explored more immediately: slowing people down as they engage with the water bowls, whether via text or otherwise, AND possibly adding a trigger delay to leave time between actions.
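
A trigger delay could look something like the following sketch: touches that arrive less than `min_gap_s` seconds after the last accepted touch are ignored, and a sliding window of the most recent touches is compared against the target sequence (blue, green, yellow, yellow, green, as described above). The class name, timing value, and bowl labels are hypothetical, and this is not how the original patch computed it.

```python
from typing import List, Optional, Sequence


class SequenceTrigger:
    """Hypothetical sketch: change scenes on a touch sequence, with a trigger delay."""

    def __init__(self, sequence: Sequence[str], min_gap_s: float = 1.0):
        if not sequence:
            raise ValueError("sequence must not be empty")
        self.sequence: List[str] = list(sequence)
        self.min_gap_s = min_gap_s          # minimum time between accepted touches
        self.recent: List[str] = []         # most recent accepted touches, oldest first
        self.last_time: Optional[float] = None

    def touch(self, bowl: str, t: float) -> bool:
        """Register a touch of `bowl` at time `t` seconds; True when the sequence completes."""
        if self.last_time is not None and t - self.last_time < self.min_gap_s:
            # Too soon after the last accepted touch: ignore it. Ignored touches
            # do not restart the delay, so rapid tapping cannot lock the bowls out.
            return False
        self.last_time = t
        self.recent.append(bowl)
        # Keep only as many touches as the target sequence is long.
        self.recent = self.recent[-len(self.sequence):]
        if self.recent == self.sequence:
            self.recent = []  # start fresh for the next scene
            return True
        return False
```

The delay also gives any motion-driven scene at least `min_gap_s` seconds to play before the next touch can register.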

PP2_Taylor__E.T. Touch/Don’t Touch__little green light…

Posted: October 4, 2016 Filed under: Assignments, Pressure Project 2, Taylor

So… I still don’t think my brightness component is firing correctly, but feel free to try it out by shining a flashlight at the camera to see if it works for you: pp2-taylor-izz
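
One possible reason a brightness trigger misbehaves is that a single threshold either never gets crossed or flickers around it. This hypothetical sketch, assuming grayscale frames as 2D lists, counts the fraction of near-white pixels (a flashlight spot may barely move the frame’s average brightness) and uses separate on and off levels so the trigger fires once per flash. All levels are guesses to calibrate against the actual camera and room.

```python
from typing import List

Frame = List[List[int]]


def bright_fraction(frame: Frame, level: int = 240) -> float:
    """Fraction of pixels in a grayscale frame at or above `level` (near white)."""
    count = sum(len(row) for row in frame)
    bright = sum(1 for row in frame for p in row if p >= level)
    return bright / count if count else 0.0


class BrightnessTrigger:
    """Hypothetical flashlight detector with hysteresis.

    Switches on when the bright-pixel fraction rises above `on_fraction` and
    only switches off once it drops below `off_fraction`, so noise around a
    single threshold does not make it fire over and over.
    """

    def __init__(self, on_fraction: float = 0.05, off_fraction: float = 0.02):
        self.on_fraction = on_fraction
        self.off_fraction = off_fraction
        self.active = False

    def update(self, frame: Frame) -> bool:
        """Return True only on the frame where the light is first detected."""
        frac = bright_fraction(frame)
        if not self.active and frac > self.on_fraction:
            self.active = True
            return True
        if self.active and frac < self.off_fraction:
            self.active = False
        return False
```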

I felt that the Makey Makey was just as limiting as it was full of possibilities… I’m not the biggest fan. Maybe I would be if the cords were longer and grounding were simpler (maybe I could have just made a bracelet).

So, I believe the first response to my system from the peanut gallery was “What happens if you touch them at the same time?” My answer: I don’t know… it will probably explode your computer, but feel free to try.

Other responses: an Alice in Wonderland feel; the grounding is reminiscent of a birthday hat; voyeuristic, watching the individual interact with the system (and seeing their facial reactions from behind).

I wonder what the reactions would be if people interacted with it as individual experiences… just walking up without really knowing anything. I was thinking about the power of the green light, and whether it would compel people to follow or break the rules (our class is always just looking to break some shit – oh, that’s what it does… what if…). All of the E.T. references came from me just thinking about touch: what’s a cool touch?

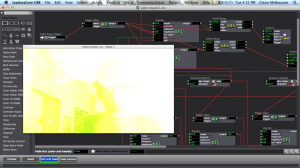

And the whole thing was a bastardization of a patch I was working on for my rehearsal… it’s not done yet, but here is the beginning of that: pp2-play4pp-izz