Final Project: Looking Across, Moving Inside

Posted: December 10, 2017. Filed under: Final Project, Uncategorized

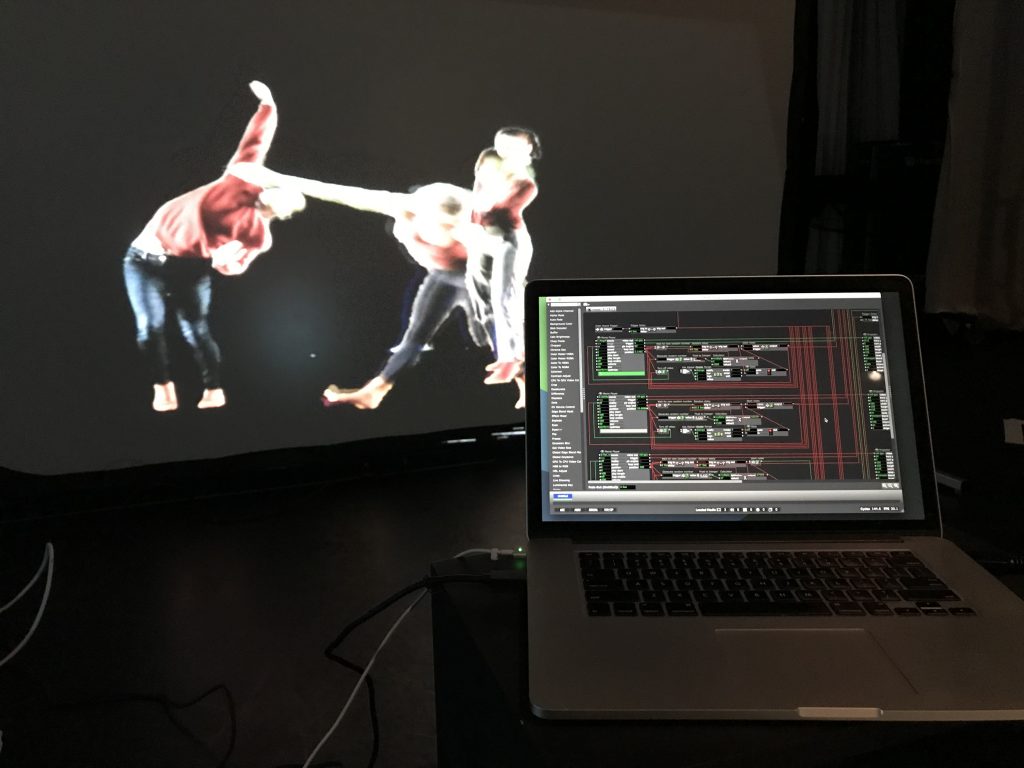

What are different ways that we can experience a performance? Erin Manning suggests that topologies of experience, or relationscapes, reveal the relationships between making, performing, and witnessing: for example, the relationships among writing a script or score, assembling performers, rehearsing, performing, and engaging the audience. Understanding these associations is an interactive and potentially immersive process that allows one to look across and move inside a work in a way that witnessing alone does not. For this project, I created an immersive and interactive installation that allows an audience member to look across and move inside a dance. This installation considers the potential for reintroducing the dimension of depth to prerecorded video on a flat screen.

Hardware and Software

The hardware and software required include a large screen that supports rear projection, a digital projector, an Xbox Kinect 2, a flat-panel display with speakers, and two laptops, one running Isadora and the other running PowerPoint. These technologies are organized as follows:

A screen is set up in the center of the space with the projector and one laptop behind it. The Kinect 2 sensor is slipped under the screen, pointing at an approximately six-foot by six-foot space delineated by tape on the floor. The flat-panel screen and second laptop are on a stand next to the screen, angled toward the taped area.

The laptop behind the screen runs the Isadora patch, and the second laptop simply displays a PowerPoint slide that instructs the participant to “Step inside the box, move inside the dance.”

Media

Generally, projection in dance performance places the live dancers in front of or behind the projected image. One cannot move to the foreground or background at will without predetermining when and where the live dancer will move. In this installation, the live dancer can move upstage or downstage at will. To achieve this, the prerecorded dancers must be filmed singly with an alpha channel and then composited together.

I first attempted to rotoscope individual dancers out from their backgrounds in prerecorded dance videos. Despite helpful tools in After Effects designed to speed up this process, each frame of video must still be manually corrected, and when two dancers overlap the process becomes extremely time-consuming. One minute of rotoscoped video takes approximately four hours of work. This is an initial test using a dancer rotoscoped from a video shot in a dance studio:

Abandoning that approach, and with the help of Sarah Lawler, I recorded ten dancers moving individually in front of a green screen. This process, while still requiring post-processing in After Effects, was significantly faster. These ten alpha-channeled videos then comprised the prerecorded media necessary for the work. An audio track was added for background music. Here is an example of a green-screen dancer:

Programming

The Isadora patch was divided into three main functions:

Videos

The Projector for each prerecorded video was placed on an odd-numbered layer. As each video ends, a random number of seconds passes before that dancer reenters the stage. A Gate actor prevents more than three dancers from being on stage at once by keeping track of how many videos are currently playing.
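The counting logic described above lives in Isadora actors rather than in text code, but it can be sketched in Python for readers who want to see the idea spelled out. This is only an illustration under assumptions: the `StageGate` class, the `reentry_delay` helper, and the 1 to 10 second delay range are hypothetical and are not taken from the patch.

```python
import random

MAX_ON_STAGE = 3  # from the text: no more than three dancers at once


class StageGate:
    """Hypothetical stand-in for the counting logic around the Gate actor."""

    def __init__(self, max_on_stage=MAX_ON_STAGE):
        self.max_on_stage = max_on_stage
        self.on_stage = set()  # ids of videos currently playing

    def try_enter(self, dancer_id):
        """Start a video only if fewer than max_on_stage are playing."""
        if dancer_id in self.on_stage or len(self.on_stage) >= self.max_on_stage:
            return False
        self.on_stage.add(dancer_id)
        return True

    def exit(self, dancer_id):
        """Call when a video reaches its end."""
        self.on_stage.discard(dancer_id)


def reentry_delay(rng, low=1.0, high=10.0):
    """Random wait in seconds before a dancer may try to reenter.

    The 1-10 second range is an assumed placeholder, not the patch's value.
    """
    return rng.uniform(low, high)
```

With ten dancers all trying to enter at once, only the first three are admitted; a fourth can enter only after one of them exits and its random delay has passed.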

The Live Dancer

Brightness data was captured from the Kinect 2 (upgraded from the original Kinect for greater resolution and depth of field) via Syphon and fed through several filters in order to isolate the body of the participant.
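The specific filters used in the patch are not listed here, so the following Python sketch shows just one plausible way to isolate a body from a brightness image: a simple threshold band. The function names and the threshold values are assumptions for illustration, and a frame is represented as a plain list of rows of 0 to 255 brightness values.

```python
def isolate_body(frame, low=60, high=255):
    """Return a copy of frame with pixels outside [low, high] set to 0.

    The threshold values are assumed; a real setup would tune them to the room.
    """
    return [[p if low <= p <= high else 0 for p in row] for row in frame]


def mean_body_brightness(masked):
    """Average brightness of the nonzero pixels, or None if no body is visible."""
    body = [p for row in masked for p in row if p > 0]
    return sum(body) / len(body) if body else None


# A tiny 3x3 frame: a dim background with a brighter body in the lower right.
frame = [
    [10, 20, 10],
    [15, 120, 130],
    [12, 125, 20],
]
masked = isolate_body(frame)
body_brightness = mean_body_brightness(masked)
```

The average brightness of the isolated body is the kind of single value that can then drive a depth calculation.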

Calculating Depth

Isadora logic was set up such that as the participant moved forward (increased brightness), the layer number on which they were projected increased by even numbers. As they moved backwards, the layer number decreased. In other words, the live dancer might be on layer 2, behind the prerecorded dancers on layers 3, 5, 7, and 9. As the live dancer moves forward to layer 6, they are now in front of the prerecorded dancers on layers 3 and 5, but behind those on layers 7 and 9.
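The layering rule can be sketched in Python as a rough illustration. The linear mapping, the 0 to 255 brightness range, and the choice of five even layers (2 through 10) are assumptions; the patch itself may scale brightness differently.

```python
def live_layer(brightness, num_even_layers=5, max_brightness=255):
    """Map brightness (0..max_brightness) to an even layer: 2, 4, ..., 2*num_even_layers.

    Brighter means closer to the sensor, so brighter values give higher layers.
    """
    brightness = max(0, min(max_brightness, brightness))
    step = int(brightness * num_even_layers / (max_brightness + 1))
    step = min(num_even_layers - 1, step)
    return 2 * (step + 1)


def in_front_of(live, prerecorded_layers):
    """Prerecorded layers that the live dancer currently appears in front of."""
    return [layer for layer in prerecorded_layers if layer < live]


prerecorded = [3, 5, 7, 9]
far_back = in_front_of(live_layer(0), prerecorded)     # live dancer on layer 2
midstage = in_front_of(live_layer(128), prerecorded)   # live dancer on layer 6
```

Because the live dancer only ever lands on even layers and the prerecorded dancers only on odd ones, the two never compete for the same layer, and the ordering is always unambiguous.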

Download the patch here: https://www.dropbox.com/s/6anzklc0z80k7z4/Depth%20Study-5-KinectV2.izz?dl=0

In Practice

Watching people interact with the installation was extremely satisfying. There is a moment of “oh!” when they realize that they can move in and around the dancers on the screen. People experimented with jumping forward and back, getting low to the floor, mimicking the movement of the dancers, leaving the stage and coming back on, and more. Here are some examples of people interacting with the dancers:

Devising Experiential Media from Benny Simon on Vimeo.

Future Questions

Is it possible for the live participant to be on more than one layer at a time? In other words, could they curve their body around a prerecorded dancer’s body? This would require a more complex method of capturing movement in real time than the Kinect can provide.

What else can happen in a virtual environment when dancers move in and around each other? What configurations of movement can trigger effects or behaviors that are not possible in a physical world?