Guinea Pigs

Posted: October 14, 2015. Filed under: Anna Brown Massey, Pressure Project 2.

Building an Isadora patch for this past project expanded my understanding of methods of enlisting CV (computer vision) to sense a light source (object) and create a projection responsive to the coordinates of that light source.

We (our sextet) selected the top-down camera in the Motion Lab as the visual data our “Video In Watcher” would accept. As I considered our light source, a robotic ball called the Sphero whose movement is controlled via a phone application, I was struck by our shift from enlisting a dancer to move through our designed grid to employing an object. This illuminated white ball served us well not only because we were no longer dependent on a colleague to be present just to walk around for us, but also because its projected light and compact size made our intake of data an easier project. We enlisted the “Difference” actor as a method of discerning light differences in space, which is a nifty way of distinguishing between “blobs.” Through this means, we could tell Isadora to recognize changes in light, that is, changes in the location of the Sphero, which gave us the position data our patches needed to respond to it.
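As a rough illustration of the idea behind differencing (not Isadora's own implementation), the Python sketch below compares two small grayscale frames and reports the centre of the pixels that became noticeably brighter. The function name `locate_change`, the threshold of 50, and the 4x4 frames are all assumptions made up for this example.

```python
def locate_change(prev_frame, curr_frame, threshold=50):
    """Return the (x, y) centroid of pixels that brightened by more than
    `threshold` between two grayscale frames, or None if nothing changed.

    Frames are lists of rows of 0-255 brightness values (hypothetical format).
    """
    xs, ys = [], []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (before_px, after_px) in enumerate(zip(prev_row, curr_row)):
            # Only count pixels that got brighter, so the spot the light
            # just left does not drag the centroid backwards.
            if after_px - before_px > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# Two tiny frames: the bright spot moves from (0, 0) to (2, 1).
before = [
    [255, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
after = [
    [0, 0, 0, 0],
    [0, 0, 255, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

position = locate_change(before, after)
unchanged = locate_change(before, before)
```

A small bright ball on a dark floor keeps the set of changed pixels compact, which is one way to read why the Sphero was easier to track than a walking dancer.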

My colleague Alexandra Stillianos wrote a succinct explanation for this method, explaining “Only when both the X and Y positions of the Sphero light source were toggled (switched) on, would the scene trigger, and my video would play. In other words, if you were in my row OR column, my video would NOT play. Only in my box (both row AND column) would the camera sense the light source and turn on, and when leaving the box and abandoning that criteria it would turn off. Each person in the class was responsible for a design/scene to activate in their respective space.”
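The row-AND-column logic in that explanation can be sketched in a few lines of Python. This is only a conceptual model of two range checks feeding a logical AND; the function names and the coordinate ranges are hypothetical and are not taken from our patch.

```python
def inside_range(value, low, high):
    """True when value falls within [low, high], like a single range check."""
    return low <= value <= high


def box_active(x, y, x_range, y_range):
    """True only when BOTH the x and the y checks pass (row AND column)."""
    return inside_range(x, *x_range) and inside_range(y, *y_range)


# Hypothetical bounds for one person's box on the floor grid.
MY_COLUMN = (40.0, 60.0)  # allowed x positions
MY_ROW = (20.0, 40.0)     # allowed y positions

in_box = box_active(50.0, 30.0, MY_COLUMN, MY_ROW)       # both match
column_only = box_active(50.0, 90.0, MY_COLUMN, MY_ROW)  # x matches, y does not
row_only = box_active(10.0, 30.0, MY_COLUMN, MY_ROW)     # y matches, x does not
```

Being in the right column or the right row alone leaves the scene off; only the overlap of the two turns it on.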

The goal was to use CV (computer vision) to sense a light source (by identifying the Sphero’s X/Y position in the space) and trigger different interactive scenes in the performance space.

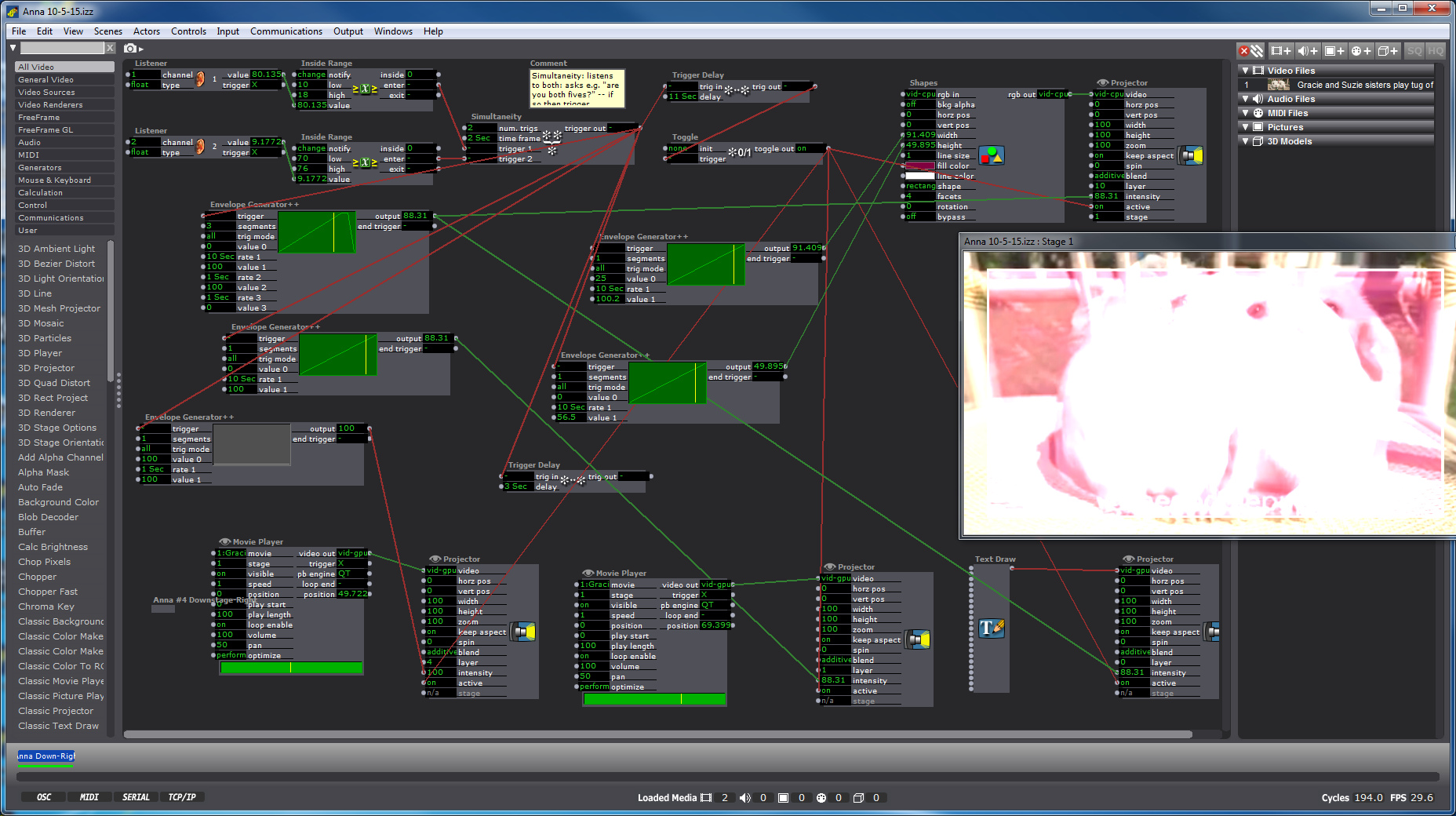

Considering this newly inanimate object as a source, I discovered the “Text Draw” actor, and chose “You are alive” as the text to appear projected when the object moved into the x-y grid space indicated through our initial measurements. (Yes, I found this funny.) My “Listener” actor intercepted channels “1” and “2,” on which we had set up our CV frame to “Broadcast” the incoming light data, and to these I applied the “Inside Range” actor as a way of beginning to inform Isadora which data would trigger my words to appear. I did a quick YouTube search for “slow motion” and found a creepy guinea pig video that, because of its single shot and stationary subjects, looked like smooth fodder for looping. I layered two copies of this video on a slight delay to give them a ghostly appearance, then added an overlay of red via the “Shapes” actor.

As we imported our video/sound/image files to accompany our Isadora patches into the main frame, we discovered that our patches were being triggered but were failing to end once the object had departed our specific x-y coordinates, as demarcated on the floor and, more importantly, as indicated by our “Inside Range” actors. We realized that our initial measurements needed to be refined, and with that shift my own actor worked successfully. We still faced difficulty in changing the coordinates on my colleague’s actor, as he had multiple user actors embedded in user actors that continued to run parts of his patch independently. We considered enlisting a “Shapes” actor sized to project all black, but the all-consuming limitation on time kept us from proceeding further. My own patch was limited by the absence of a “Comparator” as a means of refining the coordinates so that they might toggle on and off.
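The on/off behavior we were after can be modeled as a trigger that remembers whether the light was inside the box on the previous reading and reports both the moment it enters and the moment it leaves. The Python below is a sketch under that assumption, not a reconstruction of any Isadora actor; the class name `BoxTrigger`, the event labels, and the sample coordinates are invented for illustration.

```python
class BoxTrigger:
    """Track whether a point is inside a rectangle and report transitions."""

    def __init__(self, x_range, y_range):
        self.x_range = x_range
        self.y_range = y_range
        self.active = False  # was the light inside on the previous reading?

    def update(self, x, y):
        """Return "start" on entering, "stop" on leaving, otherwise None."""
        x_low, x_high = self.x_range
        y_low, y_high = self.y_range
        inside = x_low <= x <= x_high and y_low <= y <= y_high

        if inside and not self.active:
            event = "start"
        elif not inside and self.active:
            event = "stop"  # the step our scenes were missing
        else:
            event = None

        self.active = inside
        return event


# Hypothetical path: outside, into the box, still inside, then out again.
trigger = BoxTrigger(x_range=(40.0, 60.0), y_range=(20.0, 40.0))
path = [(10.0, 10.0), (50.0, 30.0), (55.0, 35.0), (90.0, 30.0)]
events = [trigger.update(x, y) for x, y in path]
```

Emitting an explicit "stop" when the point leaves the rectangle is what lets a scene end as soon as the object departs, and loosely drawn bounds would delay or suppress that event.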